How do we learn to perform complex skills like programming, physics, or piloting a plane? What changes in our brain allow us to perform these skills? How much does learning one thing help us learn something else?

These are hard questions. Most experiments only attempt to address narrow slices of the problem. How does the schedule of studying impact memory? Is it better to re-read or retrieve? Should you practice a whole skill, or build up to it from its parts? These don’t directly address the big questions.

This is what makes John Anderson’s ACT-R theory so ambitious.[1] It’s an attempt to synthesize a huge amount of work in psychology into a broad picture of how we learn complicated skills. Even if the theory turns out not to be the whole story, it helps illuminate the problem.

First, A Note on Scientific Paradigms

Before I get to explaining ACT-R, I need to step back and talk about how to think about scientific theories, in general.

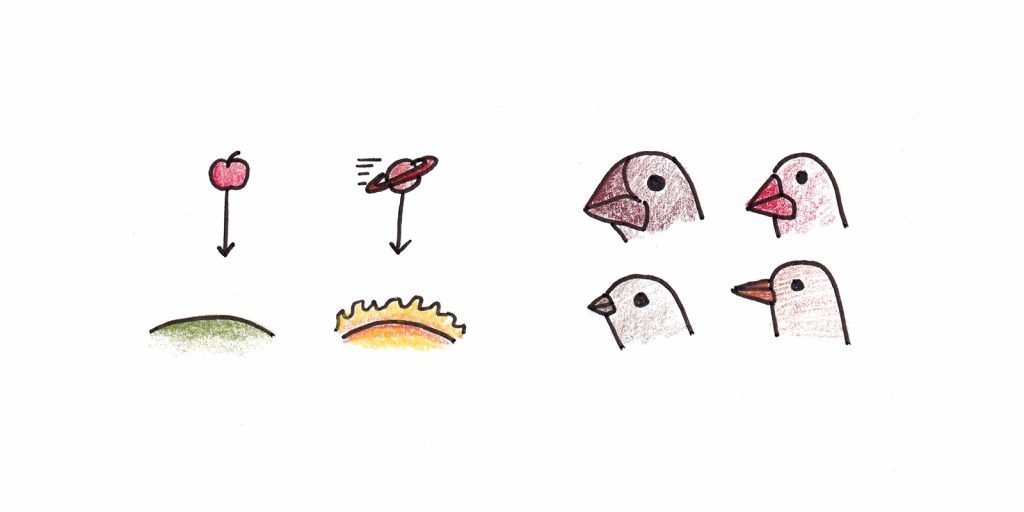

All scientific theories are built on paradigms. A paradigm is an example that you take as central for describing a phenomenon. Newton had falling apples and orbiting planets. Darwin had the beaks of finches. Obviously, Newton didn’t restrict his theory to tumbling fruit, nor Darwin to a few island-dwelling birds. Yet, these were the examples they used to lay the foundations for their broader theories.

Nowadays, we take for granted that mechanics and evolution are Science™, and thus their original paradigm cases are largely historical footnotes.[2] We can ignore their origins and simply focus on applying the theory.

Theories of the mind aren’t like this. Nobody believes we’ve found some unified theory that fully explains how the mind works. Yet we know a lot more than nothing. Scientists have collected mountains of evidence that sharply constrain any valid theory of the mind. But minds are complicated, and there are still a lot of possible theories that could fit.

With this in mind, what does ACT-R take to be its paradigm case for cognitive skill? ACT-R focuses on problem-solving, particularly in well-defined domains like algebra or programming. Its scope is intellectual skill: ACT-R does not account for how we move our bodies or how we transform input from our eyes and ears to make sense of the problem.

This paradigm might not seem very representative. Certainly, most human experience isn’t like programming in LISP. Yet a good paradigm doesn’t need to be prototypical of the phenomena it tries to model. In fact, the opposite is often true: the best paradigms tend to be unusual because they lack the messiness of more typical situations. Newtonian mechanics had to overcome the fact that we’re immersed in an atmosphere where friction is ubiquitous and most objects eventually come to rest. Darwin needed the peculiar environment of the Galápagos, where finches adapted to unique niches in relative isolation.

Within this paradigm, ACT-R makes some impressive predictions and manages to account for a huge body of psychological data. Therefore, I think it’s worth taking seriously if we want a better picture of how we learn things.

ACT-R Basics: Declarative and Procedural Memory Systems

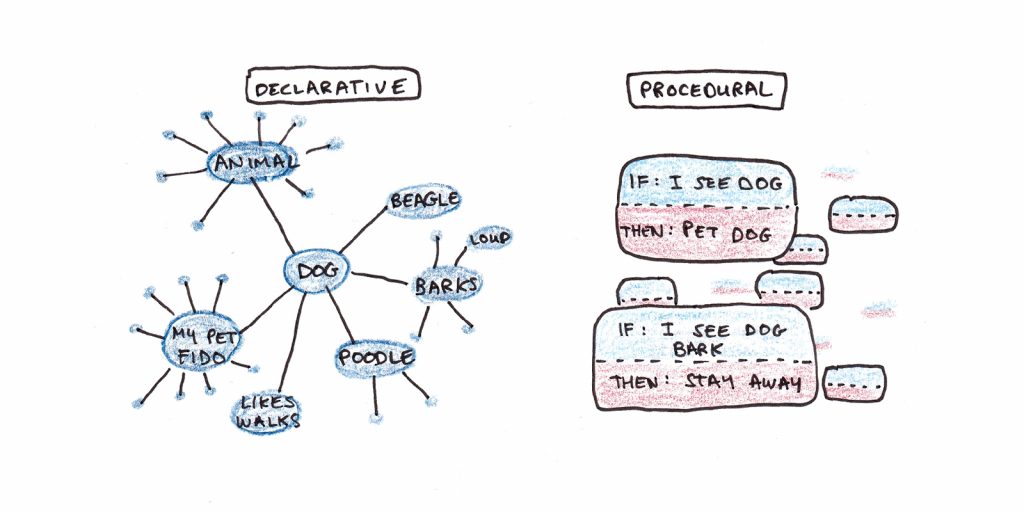

ACT-R argues that we have two different memory systems: declarative and procedural.

The declarative system includes all your memories of events, facts, ideas and experiences. Everything you consciously experience is part of this system. It contains both your direct sensory experience and your knowledge of abstract concepts.

The procedural system consists of everything you can do. It includes both motor skills, like tying a shoelace or typing on a keyboard, and mental skills, like adding up numbers or writing an email.

ACT-R explains complex skills as an ongoing interaction between these two systems. The declarative system represents the outside world as well as your inner thoughts and intentions. The procedural system acts on those representations to make overt actions or internal adjustments that move you closer to your goals.

Why two separate systems? A single system would be simpler. But there’s an impressive range of evidence arguing that these systems are distinct in the brain:

- Amnesiacs can learn through the procedural system, but not the declarative system. They can learn to perform new tasks, but afterward, they have no memory of having been taught.

- Priming experiments only work with the declarative system, and not the procedural. For example, presenting the word “computer” speeds up how quickly people can access computer-related memories. Still, it does not make them any faster at using a computer.

- Procedural memory is unidirectional. This is why saying the alphabet forward is so much easier than saying it backward. To go backward you need to produce the letters going forward, rehearse them in your head so that a declarative representation is active, and finally manipulate them to reverse the order.

- Declarative memory shows fan effects. In declarative memory, links between nodes go both ways, and either node can access the other. However, a node with many outgoing links doesn’t link to any particular one of them strongly. For example, a picture of a beagle makes it easy to recall “dog,” but the word “dog” likely won’t cause you to recall the picture of a specific beagle.

- Neuroscientific studies suggest different locations for the two systems. The hippocampus and associational cortices play pivotal roles in declarative memory. In contrast, the procedural system seems to rely on subcortical structures such as the basal ganglia and dopamine networks.
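The fan effect described in the list above can be made concrete with the associative-strength rule used in later versions of ACT-R, where the strength from a source chunk to each of its associates is roughly S − ln(fan), with fan being the number of links the source has. The sketch below is a minimal illustration under that assumption; the maximum strength S = 2.0 and the example links are hypothetical values, not figures from Anderson.

```python
import math

# Hypothetical associative network: each chunk maps to the chunks it links to.
links = {
    "beagle-picture": ["dog"],
    "dog": ["beagle-picture", "poodle", "bark", "leash", "pet", "wolf"],
}

MAX_STRENGTH = 2.0  # assumed value of S, the maximum associative strength


def associative_strength(source: str) -> float:
    """Strength from `source` to each of its associates: S - ln(fan).

    The more links a chunk has (its fan), the weaker each individual link is.
    """
    fan = len(links[source])
    return MAX_STRENGTH - math.log(fan)


for chunk in links:
    print(f"{chunk}: fan={len(links[chunk])}, "
          f"strength per link={associative_strength(chunk):.2f}")
```

The picture of a beagle has a single link, so it strongly cues "dog"; "dog" spreads its strength across many associates, so it only weakly cues any particular picture.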

The Declarative System

The basic unit of declarative memory is the chunk. This is a structure that binds approximately three pieces of information. The exact contents will vary depending on the chunk, but they’re assumed to be simple. “Seventeen is a number” might be an English description of a chunk that has three elements: [17][IS A][NUMBER].

Since chunks are so rudimentary, how do we understand anything complicated? The idea is that, through experience, we connect these chunks into elaborate networks. We can then traverse these networks to get information as we need it.

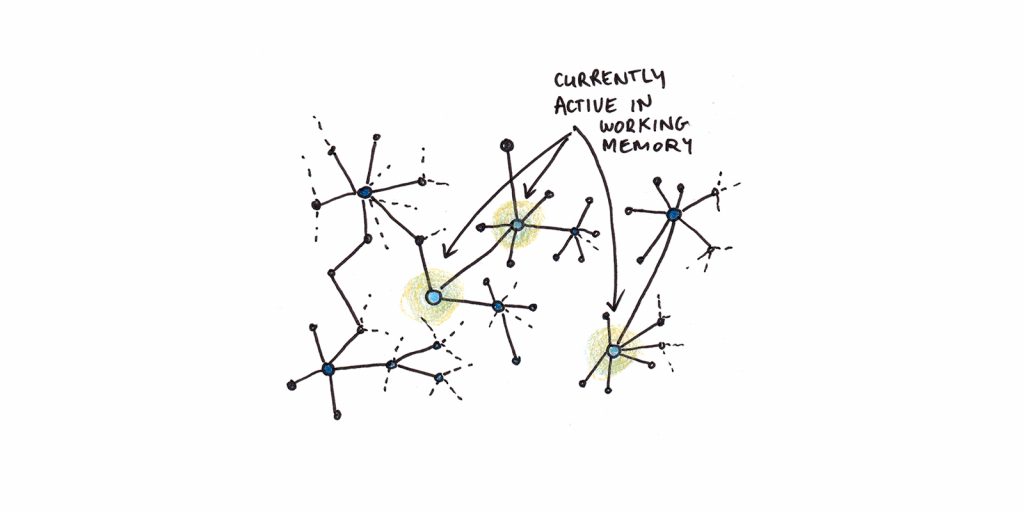

The declarative memory structure is vast, but only a few chunks are active at any one time. This reflects the distinction between conscious awareness and memory. When we need to remember something, we search through the network. For practiced memories, this is relatively easy as most related ideas will only be a step or two away. For new ideas, this is much harder since they’re less well integrated into our other knowledge and therefore require more effortful search processes.
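A toy way to picture chunks joined into a network is to store each chunk as a three-element tuple and link elements that appear together. The sketch below then counts how many links separate two ideas, echoing the point that practiced ideas sit only a step or two apart. The breadth-first search is a stand-in chosen for illustration, not ACT-R's retrieval mechanism (which is driven by activation), and the chunks themselves are hypothetical.

```python
from collections import deque

# A chunk binds roughly three elements, e.g. [17][IS A][NUMBER].
Chunk = tuple[str, str, str]

chunks: list[Chunk] = [
    ("17", "is-a", "number"),
    ("17", "is", "prime"),
    ("prime", "has-property", "two-divisors"),
    ("number", "can-be", "added"),
]


def build_network(chunks: list[Chunk]) -> dict[str, set[str]]:
    """Link the first and last elements of each chunk, in both directions."""
    graph: dict[str, set[str]] = {}
    for subject, _relation, obj in chunks:
        graph.setdefault(subject, set()).add(obj)
        graph.setdefault(obj, set()).add(subject)
    return graph


def steps_between(graph: dict[str, set[str]], start: str, goal: str):
    """Breadth-first search: number of links from start to goal, or None."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for neighbour in graph.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None


network = build_network(chunks)
print(steps_between(network, "17", "number"))        # 1
print(steps_between(network, "17", "two-divisors"))  # 2
```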

How do nodes get activated? There are three sources:

- First, you perceive things from the outside world that automatically activate nodes in memory. (Perhaps you see a dog, and some set of dog-related nodes get activated.)

- Second, you can rehearse things internally to maintain them in memory. Think of trying to remember someone’s phone number to write it down. You might repeat it to yourself to “refresh” the auditory experience that is no longer present.

- Third, nodes can activate connected nodes. This is what happens when one thought leads to another. The exact details of this spreading activation mechanism are not entirely clear in ACT-R. But Anderson assumes that how active a chunk tends to be reflects how likely it is to be useful in the current situation.
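The three sources of activation above can be sketched with the activation equation commonly used in ACT-R models: a chunk's activation is its base level, B = ln(Σ t_j^−d), summed over the times t_j since each past use, plus spreading activation from the current context, Σ W_j·S_j. Perceiving or rehearsing an item adds a recent use and so raises B, while the spreading term covers one thought leading to another. This is a minimal sketch assuming the conventional decay d = 0.5; the timestamps and weights are hypothetical, and the formulation in Rules of the Mind may differ in detail.

```python
import math

DECAY = 0.5  # d: assumed decay rate (a common default in ACT-R models)


def base_level(ages: list[float], decay: float = DECAY) -> float:
    """B = ln(sum of t^-d) over the times (seconds) since each past use."""
    return math.log(sum(t ** -decay for t in ages))


def activation(ages: list[float], weights: list[float],
               strengths: list[float]) -> float:
    """A = B + sum of W_j * S_j: base level plus spreading activation."""
    spread = sum(w * s for w, s in zip(weights, strengths))
    return base_level(ages) + spread


phone_number = [5.0, 3.0, 1.0]  # hypothetical: rehearsed three times just now
old_fact = [86_400.0]           # hypothetical: encountered once, a day ago
print(f"rehearsed: {base_level(phone_number):.2f}")
print(f"old fact:  {base_level(old_fact):.2f}")
```

Recent, repeated use keeps the phone number's base level high, while a single old exposure decays toward inaccessibility unless context spreads activation to it.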

The declarative system, with its vast hidden network of long-term memory and its briefly active nodes corresponding to our conscious awareness, is impressive. In order to solve problems, we use this system to make decisions about what to do next. But, according to ACT-R, it’s also inert. Something else must transform it into action. That’s where the procedural system comes in.

The Procedural System

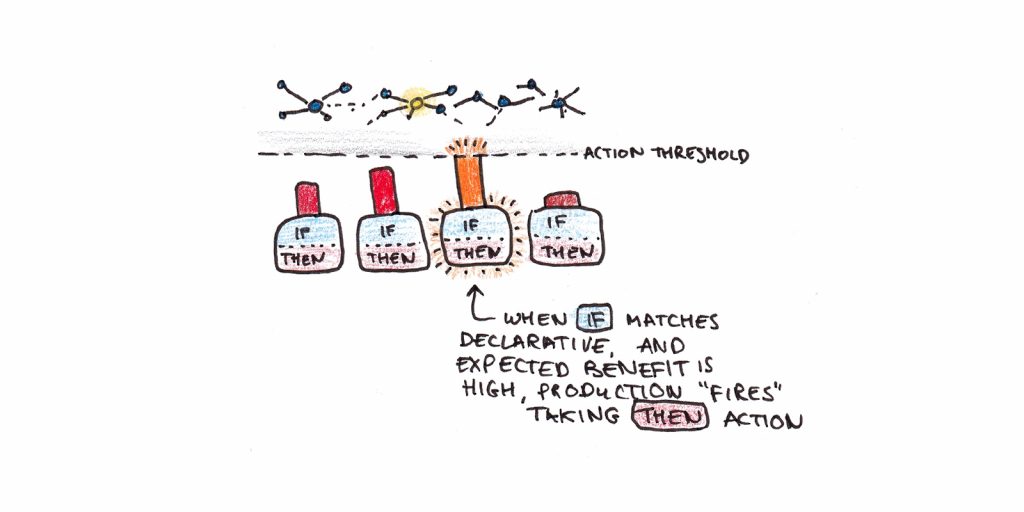

The basic unit of the procedural system is the production. This is an IF -> THEN pattern. To illustrate, a production might be “IF my goal is to solve for x, and I have the equation ax = b, THEN rewrite the equation as x = b/a.”

Think of productions like the atomic thinking steps involved in solving a problem. Whenever you need to take an action or make a decision, ACT-R models this as a production activating.

Unlike the sprawling, interlinked declarative memory, productions are modular. Each one acts as an isolated unit that is learned and strengthened independently. Solving complex problems involves more productions than simple puzzles, but the basic ingredients are the same.
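The algebra production above can be written as a small, self-contained unit with an IF test and a THEN action. In this sketch the active declarative state is assumed to be a plain dictionary, and the `Production` class and the field names are hypothetical choices for illustration, not ACT-R's own notation.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Callable

State = dict  # hypothetical stand-in for the active declarative chunks


@dataclass
class Production:
    name: str
    condition: Callable[[State], bool]  # the IF part
    action: Callable[[State], State]    # the THEN part


# IF my goal is to solve for x and I have ax = b, THEN rewrite it as x = b/a.
divide_both_sides = Production(
    name="divide-both-sides",
    condition=lambda s: s.get("goal") == "solve-for-x" and s.get("form") == "ax=b",
    action=lambda s: {**s, "form": "x=b/a", "x": Fraction(s["b"], s["a"])},
)

state = {"goal": "solve-for-x", "form": "ax=b", "a": 3, "b": 12}
if divide_both_sides.condition(state):
    state = divide_both_sides.action(state)
print(state["form"], state["x"])  # x=b/a 4
```

Because each production only inspects the state and returns a new one, it can be learned or strengthened without touching any other production, which is the modularity the paragraph describes.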

For each active representation in declarative memory, many different productions compete to find the best match for the current situation. When a production matches an active representation in declarative memory, and the expected value from executing this production exceeds the expected cost of either taking a different action or waiting, it triggers. The action is taken, the state of the world (or your internal mental state) changes, and then the process repeats itself.
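One way to sketch this competition is with the expected-gain rule associated with ACT-R's conflict resolution, E = PG − C: P is the estimated probability that firing the production leads to the goal, G is the value of the goal, and C is the expected cost. The sketch assumes that "waiting" corresponds to no candidate beating a threshold of zero and omits the noise ACT-R adds to these estimates; all numbers and production names are hypothetical.

```python
from dataclasses import dataclass

GOAL_VALUE = 20.0  # G: hypothetical value of the current goal


@dataclass
class Candidate:
    name: str
    matches: bool     # does its IF part match the active chunks?
    p_success: float  # P: estimated chance this path reaches the goal
    cost: float       # C: estimated cost (e.g. time) of this path

    def expected_gain(self, goal_value: float = GOAL_VALUE) -> float:
        return self.p_success * goal_value - self.cost


def select(candidates: list[Candidate], threshold: float = 0.0):
    """Return the matching production with the highest PG - C,
    or None (keep waiting) if no candidate beats the threshold."""
    matching = [c for c in candidates if c.matches]
    if not matching:
        return None
    best = max(matching, key=lambda c: c.expected_gain())
    return best if best.expected_gain() > threshold else None


candidates = [
    Candidate("divide-both-sides", True, 0.9, 2.0),   # gain 16
    Candidate("guess-and-check", True, 0.6, 8.0),     # gain 4
    Candidate("factor-quadratic", False, 0.95, 1.0),  # does not match
]
print(select(candidates).name)  # divide-both-sides
```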

If you repeat a sequence of actions many times, this sequence can be consolidated into a single production. Thus, only one production has to be activated to execute the entire series of mental steps from start to finish. While this is faster, it is also less flexible. With time, this can result in skills that are less transferable to new situations as you’ve automated specific solutions for specific problems, rather than implementing the general procedure from scratch each time.
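The consolidation described above can be sketched as function composition: two steps that are repeatedly used one after the other are collapsed into a single step that runs the whole sequence at once. This is a simplified illustration under the assumption that each step is a function from state to state; ACT-R's actual composition mechanism also merges the steps' conditions and can build specific retrieved facts into the new production, which this sketch omits. The step names are hypothetical.

```python
from typing import Callable

State = dict
Step = Callable[[State], State]


def subtract_c(s: State) -> State:
    """Hypothetical step 1: ax + c = d  ->  ax = d - c."""
    return {**s, "rhs": s["rhs"] - s["c"], "c": 0}


def divide_by_a(s: State) -> State:
    """Hypothetical step 2: ax = e  ->  x = e / a."""
    return {**s, "x": s["rhs"] / s["a"]}


def compose(*steps: Step) -> Step:
    """Collapse a practiced sequence of steps into a single step."""
    def combined(s: State) -> State:
        for step in steps:
            s = step(s)
        return s
    return combined


solve_linear = compose(subtract_c, divide_by_a)
print(solve_linear({"a": 2, "c": 3, "rhs": 11})["x"])  # 4.0
```

The combined step is faster to run, but it is tied to equations of the form ax + c = d; a differently shaped problem still needs the separate, more general steps.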

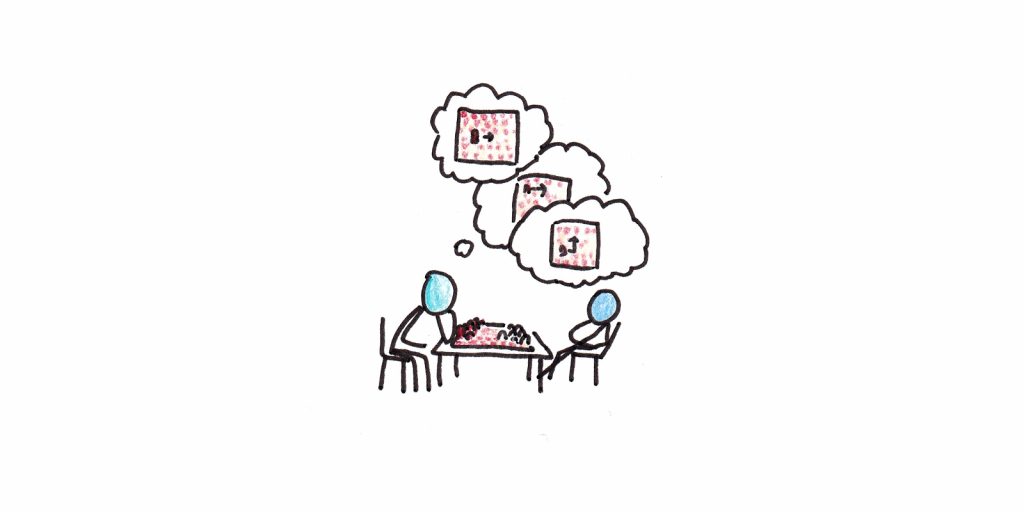

How ACT-R Claims You Solve Problems

Let’s summarize the overall process of reasoning presented here:

- You form a representation of the problem in your declarative system. This representation combines your current sensory experience with long-term memory and short-term rehearsal buffers.

- Productions compete with each other based on this current representation. Which one wins depends on how well it matches with an active chunk in declarative memory and the benefit you expect from taking that action.

- As each production is executed, it changes your present state. You either take action in the outside world, which will change your declarative representation via sensory channels, or the production changes your internal state.

- The process repeats itself until the problem is solved.
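The cycle summarized above (match, select, fire, repeat) can be sketched as a short loop. The example assumes a hypothetical task of adding by counting up, represents the declarative state as a dictionary, leaves out perception, and simply takes the first matching production as a stand-in for conflict resolution.

```python
# Each production: (name, IF test on the state, THEN update to the state).
productions = [
    ("count-up",
     lambda s: s["count"] < s["addend"],
     lambda s: {**s, "sum": s["sum"] + 1, "count": s["count"] + 1}),
    ("stop",
     lambda s: s["count"] == s["addend"],
     lambda s: {**s, "done": True}),
]


def run(state: dict, max_cycles: int = 100):
    """Match, select, fire, and repeat until the goal is marked done."""
    trace = []
    for _ in range(max_cycles):
        if state.get("done"):
            break
        matching = [p for p in productions if p[1](state)]
        if not matching:
            break  # impasse: no production applies
        name, _test, act = matching[0]  # stand-in for conflict resolution
        state = act(state)
        trace.append(name)
    return state, trace


final, trace = run({"sum": 5, "addend": 3, "count": 0})
print(final["sum"], trace)
```

Each pass through the loop changes the state, which changes which productions match on the next pass; the problem is "solved" when a production marks the goal as done.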

How Do We Acquire Skills?

In the ACT-R theory, learning skills is thought to be a process of acquiring and strengthening productions.

Initially, productions are learned via analogy. We search our long-term declarative memories for a similar problem. Then we try to match this to our current representation of the problem. When we have a match, we create a production.

ACT-R argues that we don’t learn by explicit instruction, only by example. When we appear to learn via instruction, we first generate an example based on the instruction and then use this example to create a new production.
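A toy version of learning from an example is to take a worked example and replace its specific numbers with variables, yielding an IF/THEN template like the algebra production described earlier. Anderson's analogy mechanism is considerably richer than this; the string substitution below is only an assumption-laden illustration, and the function name is hypothetical.

```python
import re


def generalize(example_problem: str, example_solution: str) -> tuple[str, str]:
    """Turn a worked example into an IF/THEN template by replacing each
    distinct number with a variable (a toy stand-in for analogy)."""
    numbers: list[str] = []
    for n in re.findall(r"\d+", example_problem):
        if n not in numbers:
            numbers.append(n)
    names = {n: chr(ord("a") + i) for i, n in enumerate(numbers)}

    def swap(text: str) -> str:
        return re.sub(r"\d+", lambda m: names.get(m.group(), m.group()), text)

    return swap(example_problem), swap(example_solution)


condition, action = generalize("3x = 12", "x = 12/3")
print(f"IF {condition} THEN {action}")  # IF ax = b THEN x = b/a
```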

Once created, productions are strengthened through use. Every time a production is used to solve a problem, it becomes more likely to be chosen again in similar circumstances. The strengthening process is incredibly slow. This is why it can take so much practice to be good at complicated skills. Getting all of the productions to fire fluently requires enormous repetition and fine-tuning.
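The slow strengthening described above shows up as the familiar power law of practice: performance keeps improving, but each additional use buys less. The sketch assumes the common form time = floor + initial × n^−rate, where n is the number of uses; the three parameter values are hypothetical and not fitted to any data.

```python
def latency(n_uses: int, floor: float = 0.5, initial: float = 4.0,
            rate: float = 0.4) -> float:
    """Hypothetical time (seconds) to execute a step after n uses,
    following a power law: floor + initial * n^-rate."""
    return floor + initial * n_uses ** -rate


for n in (1, 10, 100, 1000):
    print(n, round(latency(n), 2))
```

With these assumed parameters, every tenfold increase in practice removes the same proportion (about 60%) of the remaining time above the floor, which is why reaching fluency takes so much repetition.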

According to ACT-R, the only thing that matters for solving a problem is reaching the solution, not how you got there. Mistakes in the process waste time and do not contribute to learning. Thus Anderson advocates for intelligent tutors who immediately correct mistakes in the process of solving a problem.

Transfer of Learning

ACT-R makes robust predictions about learning and transfer that are supported by quite a bit of data.

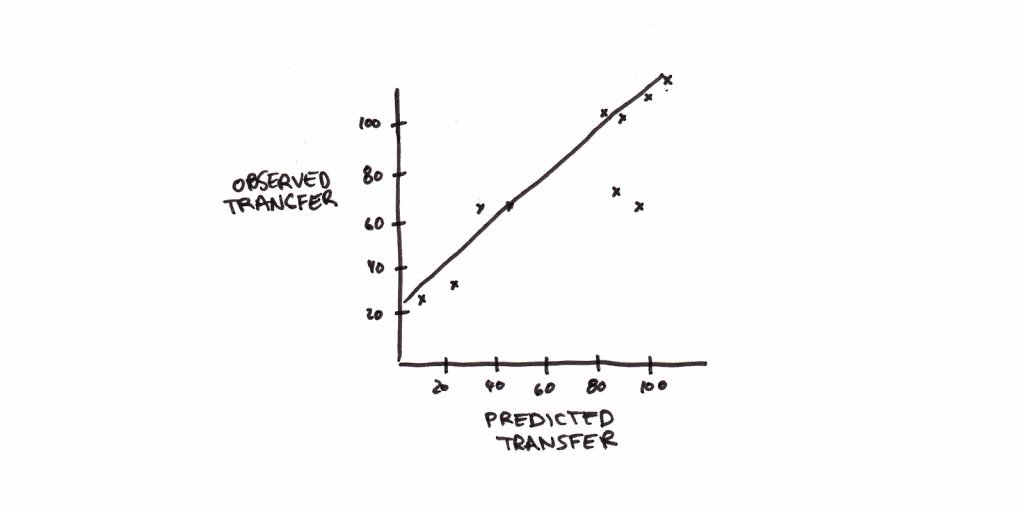

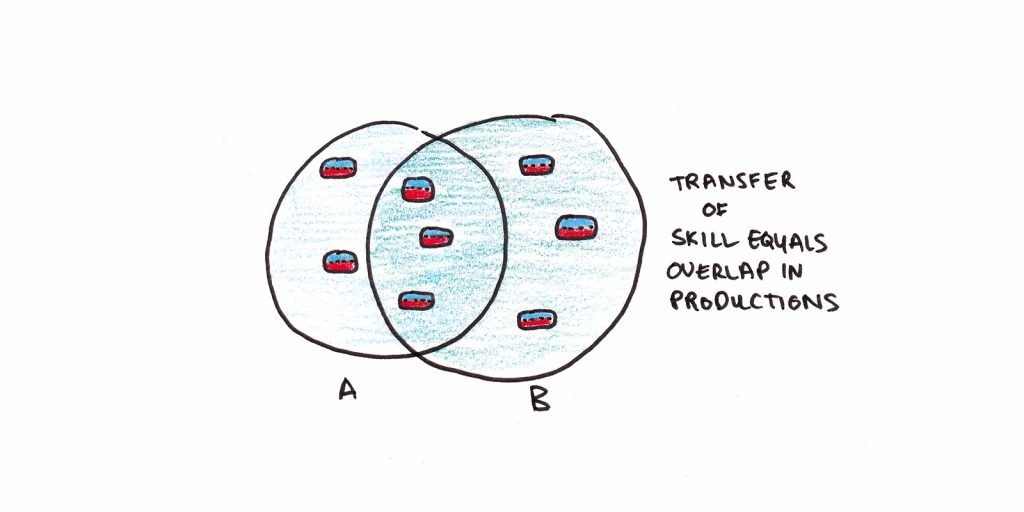

A key prediction is that the amount of transfer between two different skills depends on the number of productions they share. How well does it do?

The following graph is reproduced from Anderson’s Rules of the Mind. The prediction is that transfer should be linear in the number of productions. This is exactly what we see.[3] To my mind, this is some of the best evidence that ACT-R, or something like it, accounts for the transfer of cognitive skills.

However, transfer is somewhat better than ACT-R predicts. The fit is linear, but it starts around 26%. If skills truly had no shared productions, the theory suggests the fit should start at 0%. Anderson argues that this is probably because the models of the skills omitted some productions. For instance, there may be some productions involved in using the computer that transfer between every task that uses the same system.

What about more complicated skills? ACT-R argues that more abstract, higher-level skills should transfer better between situations because the underlying productions are preserved. So the skill of designing an algorithm should transfer between different programming languages because the algorithm is the same in each case, even if the skill of writing the syntax to code the algorithm won’t.
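The identical-productions prediction can be sketched as set overlap: the predicted savings on a new skill is the fraction of its productions you already have. The production names below are hypothetical, real analyses count many more productions, and this simple ratio does not model the roughly 26% baseline transfer observed in the data.

```python
# Hypothetical production sets for writing a binary search in two languages.
algorithm = {"pick-midpoint", "compare-to-target", "discard-half", "stop-when-empty"}
python_syntax = {"python-while-loop", "python-floor-division", "python-return"}
java_syntax = {"java-while-loop", "java-int-division", "java-return", "java-declare-int"}

python_skill = algorithm | python_syntax
java_skill = algorithm | java_syntax


def predicted_transfer(learned: set[str], target: set[str]) -> float:
    """Fraction of the target skill's productions already acquired."""
    return len(learned & target) / len(target)


print(f"{predicted_transfer(python_skill, java_skill):.0%}")  # 50%
```

The shared algorithm-level productions carry over between languages, while none of the syntax productions do, so transfer is partial rather than all-or-nothing.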

What about knowledge and understanding? ACT-R has less to say here. The types of puzzles used to test ACT-R theory tend not to require a lot of background knowledge. Since the declarative part of learning is relatively quick in the demonstrations studied, it doesn’t dominate the transfer situation. For knowledge-rich domains like law or medicine, there may be different patterns of transfer as properly activating the declarative memory system becomes the most time-consuming part of acquiring a skill.

Over a century ago, Edward Thorndike proposed that the only transfer we could expect between skills was due to them containing identical elements. ACT-R is essentially a revised version of this theory, arguing that the elements Thorndike sought are productions.

Implications of ACT-R

Nearly a year ago, I started digging into research on transfer. It turned out to be a much deeper and more interesting question than I had initially realized. Understanding what knowledge transfers depends critically on the answer to the question, “what is actually learned through experience?”

ACT-R makes a bold claim regarding this central question: The basic units of skill are productions. Practice generates new productions and strengthens old ones. Skills transfer to the degree to which these productions overlap.

There are a few general implications we can tease out:

- Most skills will be highly specific. Chess strategy doesn’t transfer to business strategy because they have almost no productions in common. A microscopic analysis of any two skills should, in principle, tell us how much transfer is possible.

- Transfer should look smaller on tests of problem solving than on tests of future learning. To solve a problem you need all of the productions. Possessing half of the productions doesn’t help because you’re missing steps needed to move forward. However, having half of the productions will make learning twice as fast, because you don’t have as many new ones to acquire. School frequently fails to teach all of the skills needed for real-world performance. This can be embarrassing when you measure people’s proficiency on problems. But the result needn’t be gloomy: with further training, those people would likely quickly learn skills that use the same components.

- Practice makes perfect, but many types of practice can be wasteful. Anderson favors intelligent tutoring systems that immediately correct students when they make a mistake. Whether computers are up for this task is an open question, but human tutors are one of the most effective instructional interventions known.

- Complicated skills have simple learning mechanisms. Although the description of a production system may look complicated, it’s dead simple compared to the diversity of skills we regularly perform. Positing simple mechanisms suggests that even the most complex performances can, in theory, be broken down into learnable parts. The only difficulty is investing all the time needed to learn them.
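The contrast between problem-solving tests and future learning can be sketched directly: solving requires every production, while learning time scales with the number still missing. The sketch assumes a hypothetical ten-step skill and that each production takes the same time to learn, which real skills will not satisfy exactly.

```python
def can_solve(known: set[str], required: set[str]) -> bool:
    """Problem solving is all-or-nothing: every step must be available."""
    return required <= known


def learning_time(known: set[str], required: set[str],
                  time_per_production: float = 1.0) -> float:
    """Learning time scales with the number of productions still missing."""
    return len(required - known) * time_per_production


required = {f"step-{i}" for i in range(10)}  # hypothetical 10-step skill
half = {f"step-{i}" for i in range(5)}

print(can_solve(half, required))  # False
print(learning_time(set(), required), learning_time(half, required))  # 10.0 5.0
```

Someone holding half the productions scores zero on the problem-solving test, yet needs only half the training time of a complete novice.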

In the next essay, I will examine Walter Kintsch’s Construction-Integration model. Whereas ACT-R uses well-defined problem-solving as its central example, CI takes the process of comprehending text as its paradigm. The two models overlap considerably, which is reassuring given the volume of psychological data both must account for. Still, they have some interesting contrasts as well.

Footnotes

- [1] ACT-R stands for Adaptive Control of Thought – Rational. It’s an advancement of Anderson’s earlier ACT* (pronounced "act star") model. Unless otherwise stated, all material comes from Anderson’s book describing the ACT-R system, Rules of the Mind.

- [2] I’m neglecting relativity here, but Newtonian mechanics is still considered a great approximation.

- [3] The two weird outliers below the line are from experiments where researchers tried to create negative transfer. They did this by having people learn one text editor and then getting them to use a made-up one in which many of the commands were swapped around. This demonstrates that old skills can sometimes interfere with new learning, but the fact that transfer was still positive suggests interference is not as big a deal as some theories of learning would argue.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.