A mental model is a general idea that can be used to explain many different phenomena. Supply and demand in economics, natural selection in biology, recursion in computer science, or proof by induction in mathematics—these models are everywhere once you know to look for them.

Just as understanding supply and demand helps you reason about economics problems, understanding mental models of learning will make it easier to think about learning problems.

Unfortunately, learning is rarely taught as a class on its own—meaning most of these mental models are known only to specialists. In this essay, I’d like to share the ten that have influenced me the most, along with references to dig deeper in case you’d like to know more.

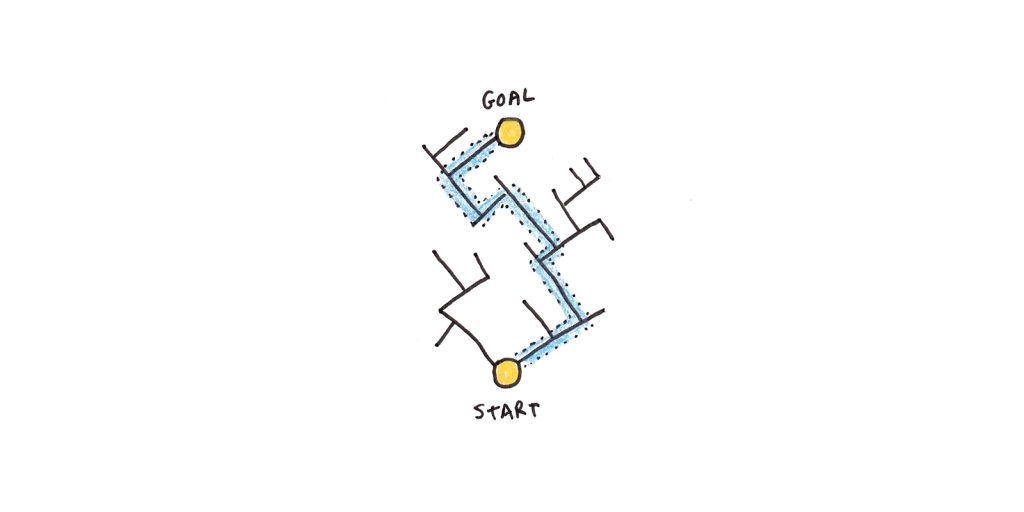

1. Problem solving is search.

Herbert Simon and Allen Newell launched the study of problem solving with their landmark book, Human Problem Solving. In it, they argued that people solve problems by searching through a problem space.

A problem space is like a maze: you know where you are now, you’d know if you’ve reached the exit, but you don’t know how to get there. Along the way, you’re constrained in your movements by the maze’s walls.
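To make the maze picture concrete, here is a minimal sketch of brute-force search through a tiny problem space. The grid, the `solve` function and the choice of breadth-first search are illustrative assumptions rather than anything taken from Newell and Simon; the point is only that a searcher with no prior knowledge has to try states one by one until the exit test succeeds.

```python
from collections import deque

# Hypothetical maze: S = start, E = exit, # = wall, . = open cell.
MAZE = [
    "S.#.",
    ".##.",
    "...E",
]

def solve(maze):
    """Breadth-first search. Returns (moves on a shortest route, states discovered)."""
    rows, cols = len(maze), len(maze[0])
    start = next(
        (r, c) for r in range(rows) for c in range(cols) if maze[r][c] == "S"
    )
    frontier = deque([(start, 0)])  # states waiting to be examined
    seen = {start}                  # states already discovered
    while frontier:
        (r, c), moves = frontier.popleft()
        if maze[r][c] == "E":       # the "would I know the exit?" test
            return moves, len(seen)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            # Walls constrain which moves are legal.
            if inside and maze[nr][nc] != "#" and (nr, nc) not in seen:
                seen.add((nr, nc))
                frontier.append(((nr, nc), moves + 1))
    return None  # no route exists

moves, states_discovered = solve(MAZE)
```

Here `moves` is the length of a shortest route and `states_discovered` counts how much of the space had to be explored to find it. The second number is the one that grows painfully as problem spaces get larger.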

Problem spaces can also be abstract. Solving a Rubik’s cube, for instance, means moving through a large problem space of configurations—the scrambled cube is your start, the cube with each color segregated to a single side is the exit, and the twists and turns define the “walls” of the problem space.

Real-life problems are typically more expansive than mazes or Rubik’s cubes—the start state, end state and exact moves are often not clear-cut. But searching through the space of possibilities is still a good characterization of what people do when solving unfamiliar problems—meaning when they don’t yet have a method or memory that guides them directly to the answer.

One implication of this model is that, without prior knowledge, most problems are really difficult to solve. A Rubik’s cube has over forty-three quintillion configurations—a big space to search in if you aren’t clever about it. Learning is the process of acquiring patterns and methods to cut down on brute-force searching.
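The size of that space can be checked with a short calculation. This sketch uses the standard counting argument for a 3x3x3 cube (arrangements and orientations of corners and edges, halved for a parity constraint). The variable names and the rate of one billion configurations checked per second are assumptions made for illustration.

```python
from math import factorial

# 8 corners can be permuted; 7 of them can be twisted freely (the 8th is forced).
corner_states = factorial(8) * 3**7
# 12 edges can be permuted; 11 of them can be flipped freely (the 12th is forced).
edge_states = factorial(12) * 2**11
# Only half of the combined permutations are reachable by legal twists.
total_states = corner_states * edge_states // 2

CHECKS_PER_SECOND = 1_000_000_000      # hypothetical brute-force speed
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
years_to_enumerate = total_states / (CHECKS_PER_SECOND * SECONDS_PER_YEAR)

print(f"{total_states:,} configurations")
print(f"about {years_to_enumerate:,.0f} years to enumerate at the assumed rate")
```

Even at that generous assumed speed, checking every configuration would take more than a thousand years, which is why learned patterns and methods matter so much more than raw search.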

2. Memory strengthens by retrieval.

Retrieving knowledge strengthens memory more than seeing something for a second time does. Testing knowledge isn’t just a way of measuring what you know—it actively improves your memory. In fact, testing is one of the best study techniques researchers have discovered.

Why is retrieval so helpful? One way to think of it is that the brain economizes effort by remembering only those things that are likely to prove useful. If you always have an answer at hand, there’s no need to encode it in memory. In contrast, the difficulty associated with retrieval is a strong signal that you need to remember.

Retrieval only works if there is something to retrieve. This is why we need books, teachers and classes. When memory fails, we fall back on problem-solving search which, depending on the size of the problem space, may fail utterly to give us a correct answer. However, once we’ve seen the answer, we’ll learn more by retrieving it than by repeatedly viewing it.

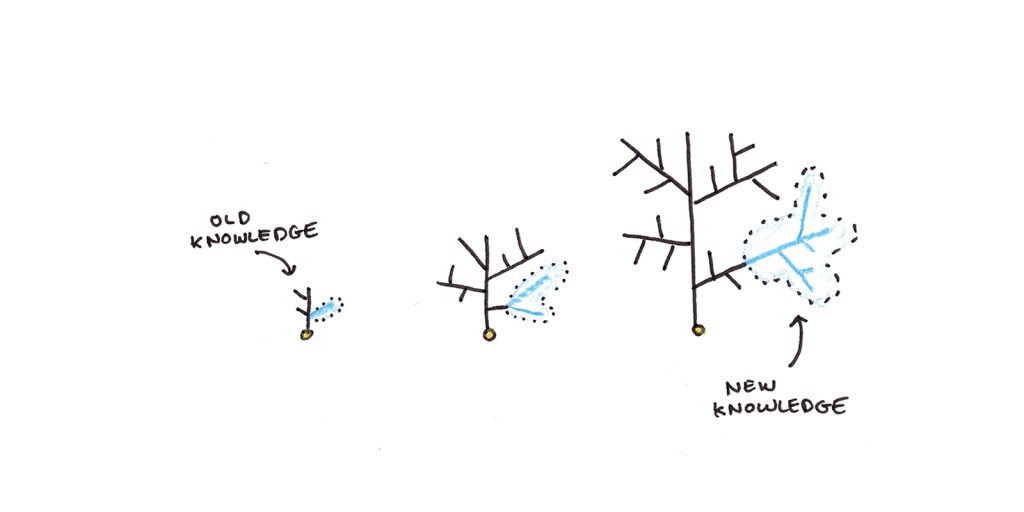

3. Knowledge grows exponentially.

How much you’re able to learn depends on what you already know. Research finds that the amount of knowledge retained from a text depends on prior knowledge of the topic. This effect can even outweigh general intelligence in some situations.

As you learn new things, you integrate them into what you already know. This integration provides more hooks for you to recall that information later. However, when you know little about a topic, you have fewer hooks to put new information on. This makes the information easier to forget. Like a crystal growing from a seed, future learning is much easier once a foundation is established.

This process has limits, of course, or knowledge would accelerate indefinitely. Still, it’s good to keep in mind because the early phases of learning are often the hardest and can give a misleading impression of future difficulty within a field.

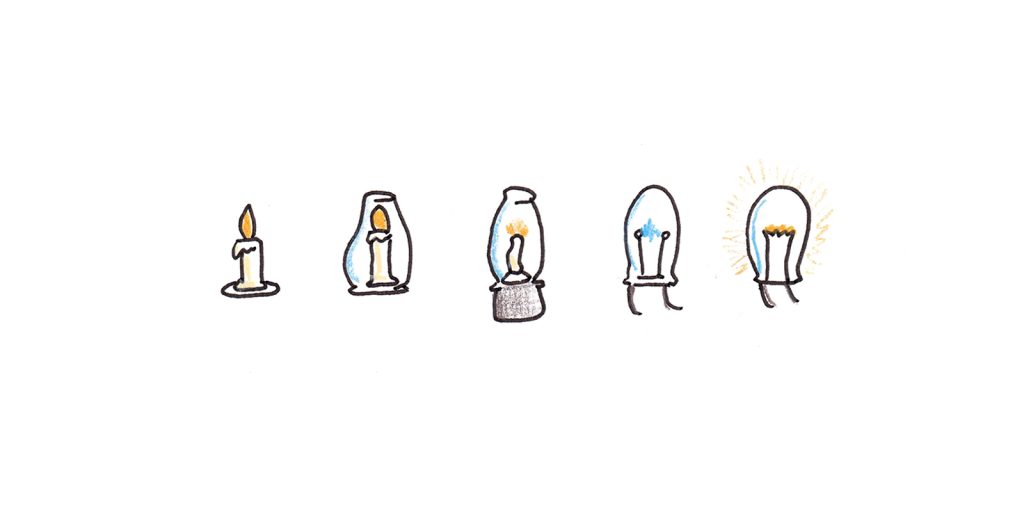

4. Creativity is mostly copying.

Few subjects are as misunderstood as creativity. We tend to imbue creative individuals with a near-magical aura, but creativity is much more mundane in practice.

In an impressive review of significant inventions, Matt Ridley argues that innovation results from an evolutionary process. Rather than springing into the world fully formed, a new invention is essentially a random mutation of old ideas. When those ideas prove useful, they expand to fill a new niche.

Evidence for this view comes from the phenomenon of near-simultaneous innovations. Numerous times in history, multiple, unconnected people have developed the same innovation, which suggests that these inventions were somehow “nearby” in the space of possibilities right before their discovery.

Even in fine art, the importance of copying has been neglected. Yes, many revolutions in art were explicit rejections of past trends. But the revolutionaries themselves were, almost without exception, steeped in the tradition they rebelled against. Rebelling against any convention requires awareness of that convention.

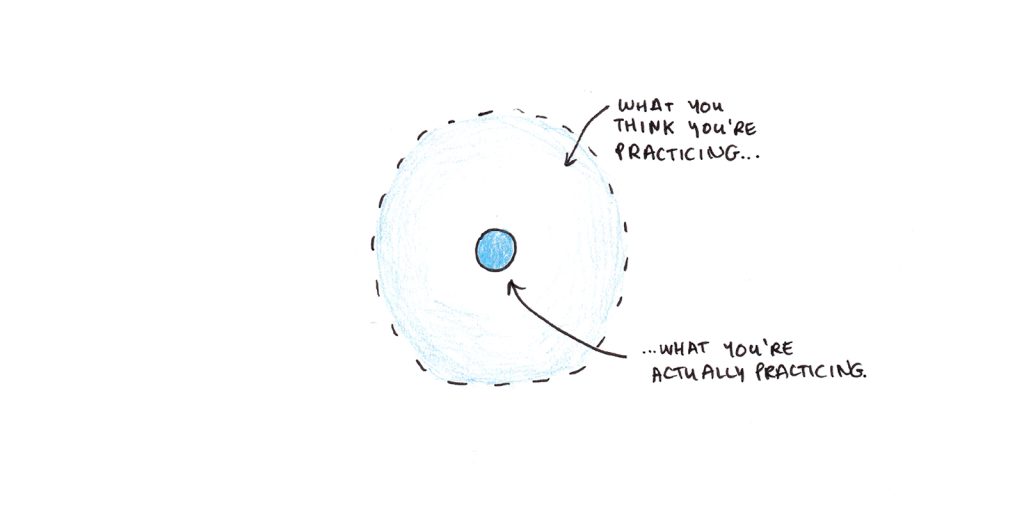

5. Skills are specific.

Transfer refers to enhanced abilities in one task after practice or training in a different task. In research on transfer, a typical pattern shows up:

- Practice at a task makes you better at it.

- Practice at a task helps with similar tasks (usually ones that overlap in procedures or knowledge).

- Practice at one task helps little with unrelated tasks, even if they seem to require the same broad abilities like “memory,” “critical thinking” or “intelligence.”

It’s hard to make exact predictions about transfer because they depend on knowing both exactly how the human mind works and the structure of all knowledge. However, in more restricted domains, John Anderson has found that productions—IF-THEN rules that operate on knowledge—form a fairly good match for the amount of transfer observed in intellectual skills.
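One rough way to picture the production idea is to treat each skill as a set of IF-THEN rules and estimate transfer by how many rules two skills share. The sketch below is a toy illustration under that assumption, not Anderson's actual model; the rule texts, the task names and the `shared_fraction` function are all hypothetical.

```python
# Hypothetical productions (IF-THEN rules) for three toy tasks.
SOLVING_EQUATIONS = {
    "IF a term is on the wrong side THEN move it and flip its sign",
    "IF like terms are present THEN combine them",
    "IF the variable has a coefficient THEN divide both sides by it",
}
SOLVING_INEQUALITIES = SOLVING_EQUATIONS | {
    "IF dividing by a negative number THEN reverse the inequality sign",
}
CHESS_OPENINGS = {
    "IF the center is open THEN develop a knight toward it",
    "IF the king is uncastled THEN castle before attacking",
}

def shared_fraction(trained, target):
    """Share of the target task's rules that were already practiced elsewhere."""
    if not target:
        return 0.0
    return len(trained & target) / len(target)

near_transfer = shared_fraction(SOLVING_EQUATIONS, SOLVING_INEQUALITIES)
far_transfer = shared_fraction(SOLVING_EQUATIONS, CHESS_OPENINGS)
```

In this toy setup, practice on equations covers three of the four rules needed for inequalities but none of the chess rules, even though both tasks might loosely be described as "critical thinking."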

While skills may be specific, breadth creates generality. For instance, learning a word in a foreign language is only helpful when using or hearing that word. But if you know many words, you can say a lot of different things.

Similarly, knowing one idea may matter little, but mastering many can give enormous power. Each extra year of education is estimated to raise IQ by 1 to 5 points, in part because the breadth of knowledge taught in school overlaps with that needed in real life (and on intelligence tests).

If you want to be smarter, there are no shortcuts—you’ll have to learn a lot. But the converse is also true. Learning a lot makes you more intelligent than you might predict.

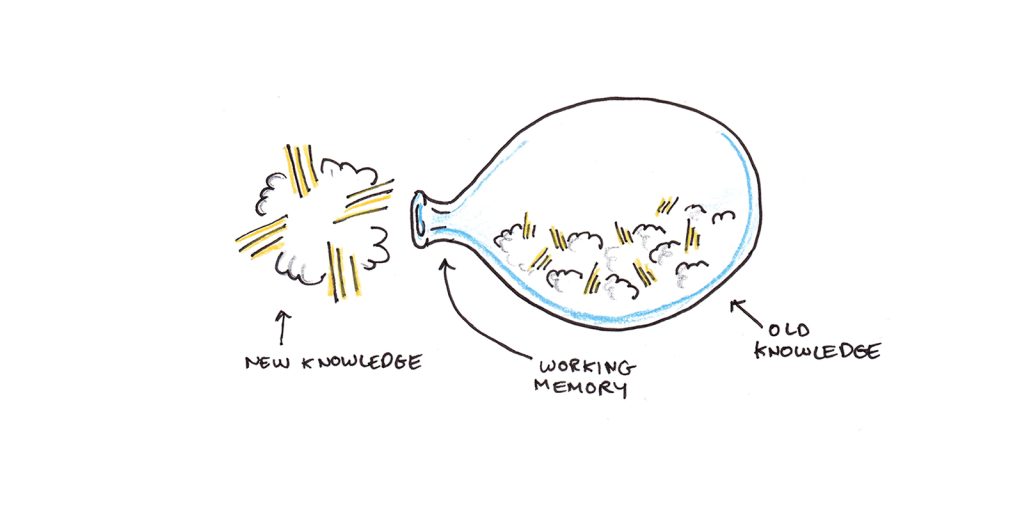

6. Mental bandwidth is extremely limited.

We can only keep a few things in mind at any one time. George Miller initially pegged the number at seven, plus or minus two items. But more recent work has suggested the number is closer to four things.

This incredibly narrow space is the bottleneck through which all learning, every idea, memory and experience, must flow if it is going to become part of our long-term memory. Subliminal learning doesn’t work. If you aren’t paying attention, you’re not learning.

The primary way we can be more efficient with learning is to ensure the things that flow through the bottleneck are useful. Devoting bandwidth to irrelevant elements may slow us down.

Since the 1980s, cognitive load theory has been used to explain how interventions optimize (or limit) learning based on our limited mental bandwidth. This research finds:

- Problem solving may be counterproductive for beginners. Novices do better when shown worked examples (solutions) instead.

- Materials should be designed to avoid needing to flip between pages or parts of a diagram to understand the material.

- Redundant information impedes learning.

- Complex ideas can be learned more easily when presented first in parts.

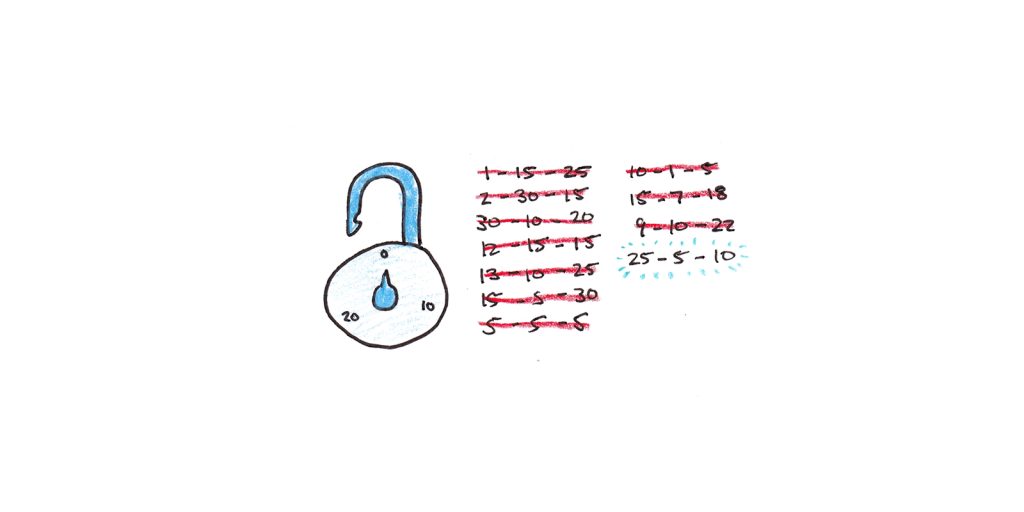

7. Success is the best teacher.

We learn more from success than failure. The reason is that problem spaces are typically large, and most solutions are wrong. Knowing what works cuts down the possibilities dramatically, whereas experiencing failure only tells you one specific strategy doesn’t work.
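The asymmetry can be put in rough numbers. This sketch assumes a problem space with `N` equally plausible candidate strategies, exactly one of which works; both assumptions, and the function names, are simplifications introduced here for illustration.

```python
import math

def bits_from_success(n_candidates):
    """Information gained from learning which single candidate works."""
    return math.log2(n_candidates)

def bits_from_failure(n_candidates):
    """Information gained from learning that one tried candidate does not work."""
    return math.log2(n_candidates / (n_candidates - 1))

N = 1000  # hypothetical number of candidate strategies
success_bits = bits_from_success(N)
failure_bits = bits_from_failure(N)

print(f"success: {success_bits:.2f} bits, failure: {failure_bits:.4f} bits")
```

Under these assumptions, a success narrows 1,000 possibilities to one, while a failure only narrows them to 999, so a single success carries thousands of times more information than a single failure.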

A good rule is to aim for a roughly 85% success rate when learning. You can do this by calibrating the difficulty of your practice (open vs. closed book, with vs. without a tutor, simple vs. complex problems) or by seeking extra training and assistance when falling below this threshold. If you succeed above this threshold, you’re probably not seeking hard enough problems—and are practicing routines instead of learning new skills.

8. We reason through examples.

How people can think logically is an age-old puzzle. Since Kant, we’ve known that logic can’t be acquired from experience. Somehow, we must already know the rules of logic, or an illogical mind could never have invented them. But if that is so, why do we so often fail at the kinds of problems logicians invent?

In 1983, Philip Johnson-Laird proposed a solution: we reason by constructing a mental model of the situation.

To test a syllogism like “All men are mortal. Socrates is a man. Therefore, Socrates is mortal,” we imagine a collection of men, all of whom are mortal, and imagine that Socrates is one of them. By inspecting this model, we conclude that the syllogism is valid.

Johnson-Laird suggested that this mental-model-based reasoning also explains our logical deficits. We struggle most with logical statements that require us to examine multiple models. The more models we need to construct and review, the more likely we are to make mistakes.

Related research by Daniel Kahneman and Amos Tversky shows that this example-based reasoning can lead us to mistake our fluency in recalling examples for the actual probability of an event or pattern. For instance, we might think more words fit the pattern K _ _ _ than _ _ K _ because it is easier to think of examples in the first category (e.g., KITE, KALE, KILL) than the second (e.g., TAKE, BIKE, NUKE).

Reasoning through examples has several implications:

- Learning is often faster through examples than abstract descriptions.

- To learn a general pattern, we need many examples.

- We must watch out when making broad inferences based on a few examples. (Are you sure you’ve considered all the possible cases?)

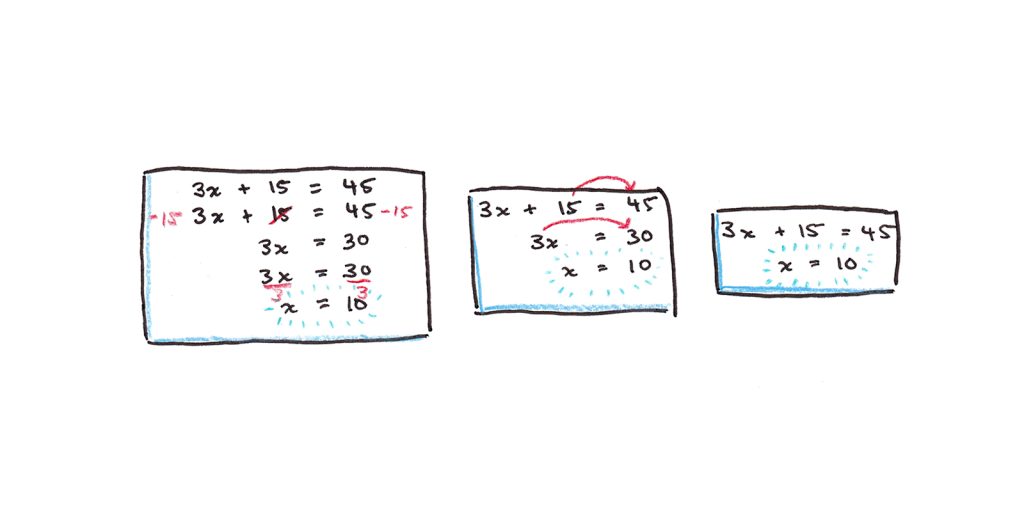

9. Knowledge becomes invisible with experience.

Skills become increasingly automated through practice. This reduces our conscious awareness of the skill, so performing it takes up less of our precious working memory capacity. Think of driving a car: at first, using the blinkers and the brakes was painfully deliberate. After years of driving, you barely think about it.

The increased automation of skills has drawbacks, however. One is that it becomes much harder to teach a skill to someone else. When knowledge becomes tacit, it becomes harder to make explicit how you make a decision. Experts frequently underestimate the importance of “basic” skills because, having long been automated, they don’t seem to factor much into their daily decision-making.

Another drawback is that automated skills are less open to conscious control. This can lead to plateaus in progress when you keep doing something the way you’ve always done it, even when that is no longer appropriate. Seeking more difficult challenges becomes vital because these bump you out of automaticity and force you to try better solutions.

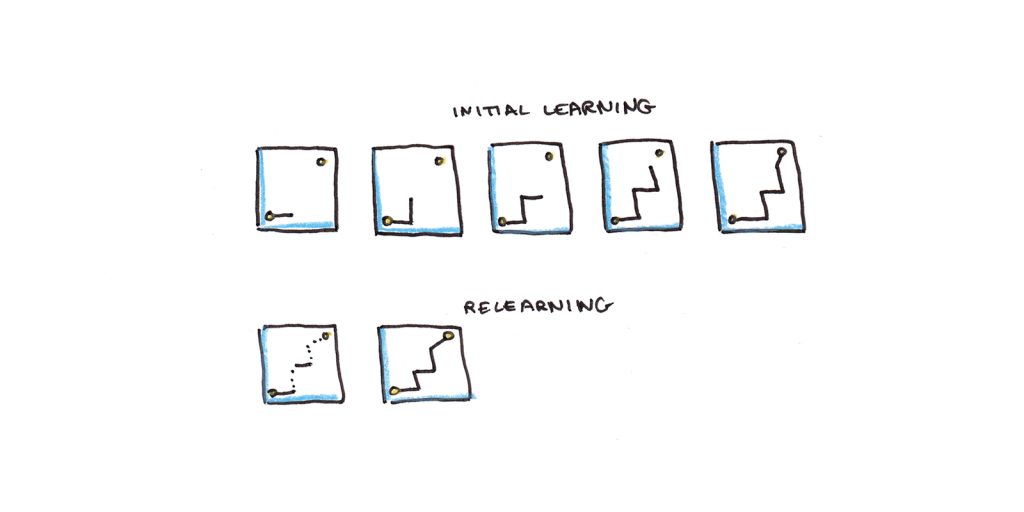

10. Relearning is relatively fast.

After years spent in school, how many of us could still pass the final exams we needed to graduate? Faced with classroom questions, many adults sheepishly admit they recall little.

Forgetting is the unavoidable fate of any skill we don’t use regularly. Hermann Ebbinghaus found that knowledge tapers off at an exponential rate—most quickly at the beginning, slowing down as time elapses.
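The shape of that curve is easy to see in a small sketch. A common simplification, assumed here rather than taken from Ebbinghaus's own data, models retention as exponential decay; the `stability` parameter and its value of five days are hypothetical.

```python
import math

def retention(days, stability=5.0):
    """Fraction of material still retained after `days` under exponential decay."""
    return math.exp(-days / stability)

# Compare how much is lost during the first day with the loss during the tenth.
first_day_loss = retention(0) - retention(1)
tenth_day_loss = retention(9) - retention(10)

print(f"lost on day 1:  {first_day_loss:.1%}")
print(f"lost on day 10: {tenth_day_loss:.1%}")
```

Whatever value is chosen for `stability`, the same pattern holds in this model: the largest losses come right after learning, and each later day takes a smaller bite.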

Yet there is a silver lining. Relearning is usually much faster than initial learning. Some of this can be understood as a threshold problem. Imagine memory strength ranges between 0 and 100. Under some threshold, say 35, a memory is inaccessible. Thus, if a memory dropped from 36 to 34 in strength, you would forget what you had known. But even a little boost from relearning would repair the memory enough to recall it. In contrast, a new memory (starting at zero) would require much more work.
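The threshold arithmetic can be written out directly. This sketch uses the numbers from the example above and assumes, for simplicity, that study adds strength in equal units; the function name is hypothetical.

```python
RECALL_THRESHOLD = 35  # below this strength, a memory is inaccessible

def boost_needed(strength, threshold=RECALL_THRESHOLD):
    """Units of strength required to make a memory recallable again."""
    return max(0, threshold - strength)

faded_memory = boost_needed(34)   # a memory that slipped from 36 to 34
brand_new = boost_needed(0)       # a memory that was never formed
still_intact = boost_needed(36)   # a memory that is still above the threshold

print(faded_memory, brand_new, still_intact)
```

In this toy model, both the faded memory and the missing one look identical from the outside, since neither can be recalled, yet the faded one needs a small fraction of the work to restore.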

Connectionist models, inspired by biological neural networks, offer another argument for the potency of relearning. In these models, a computational neural network may take hundreds of iterations to reach the optimal point. If you “jiggle” the connections in this network, it forgets the right answer and responds no better than chance. However, as with the threshold explanation above, the network relearns the optimal response much faster the second time.[1]
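A very small numerical sketch shows the flavor of this effect. It is not a neural network: it runs plain gradient descent on a two-parameter quadratic "canyon" with one shallow direction and one steep one. The curvatures, the learning rate, the starting point and the hand-placed jiggle onto the steep wall are all assumptions chosen for illustration.

```python
CURVATURES = [1.0, 50.0]  # shallow canyon floor, steep canyon wall (assumed values)

def loss(w):
    """Quadratic loss with its minimum at w = [0, 0]."""
    return 0.5 * sum(c * x * x for c, x in zip(CURVATURES, w))

def descend(w, lr=0.019, tol=1e-6, max_steps=10_000):
    """Plain gradient descent. Returns (final weights, steps taken)."""
    w = list(w)
    steps = 0
    while loss(w) > tol and steps < max_steps:
        # The gradient of the loss along each axis is curvature * weight.
        w = [x - lr * c * x for c, x in zip(CURVATURES, w)]
        steps += 1
    return w, steps

# First learning: start far away along the shallow canyon floor.
start = [10.0, 0.0]
learned, first_steps = descend(start)

# "Jiggle" the learned weights up the steep wall until the loss is
# roughly as bad as it was at the very beginning.
jiggled = [learned[0], learned[1] + 1.4142]
loss_after_jiggle = loss(jiggled)

# Relearning: run the same procedure again from the jiggled weights.
_, relearn_steps = descend(jiggled)

print(f"initial loss {loss(start):.1f}, first learning took {first_steps} steps")
print(f"loss after jiggle {loss_after_jiggle:.1f}, relearning took {relearn_steps} steps")
```

In this toy setting the jiggled weights score about as badly as the untrained ones, yet recovery takes only a handful of steps because the steep wall points almost straight back to the optimum. Noise that pushed the weights far along the shallow floor would not recover this quickly, so the sketch illustrates the argument rather than proving it.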

Relearning is a nuisance, especially since struggling with previously easy problems can be discouraging. Yet it’s no reason not to learn deeply and broadly—even forgotten knowledge can be revived much faster than starting from scratch.

What are the learning challenges you’re facing? Can you apply one of these mental models to see it in a new light? What would the implications be for tackling a skill or subject you find difficult? Share your thoughts in the comments!

Footnotes

[1] These networks are trained via gradient descent, which essentially works by rolling downhill. Correct knowledge is like the gently sloping bottom of a steep canyon: the correct direction is down the canyon, but the sides are quite high. Unlike a physical canyon, which sits in three-dimensional space, most networks live in an extremely high-dimensional space. That means any imprecision in the direction results in running up the side of the canyon, so networks typically slosh around a lot before getting to the bottom of the long canyon. However, when you add noise to a trained network, the “downhill” direction usually points straight back to the optimal point.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.
