Measuring productivity is hard. A lot of the form and (dys)function of working life can be derived from this fact.

Consider this perspective on Japanese salaryman work culture:

Your first obligation, in all things, will be to your company. You will work incredibly hard (90+ hour weeks barely even occasion comment) on their behalf. The company can ask you to head to a foreign office for three years without your wife and child beginning tomorrow, and you will be expected to say “Sure thing, when does my flight leave?”

…

There exist companies which don’t require their salarymen to work Saturdays. That is considered almost decadent for salarymen — the more typical schedules are either “2 Saturdays a month off” or “every Sunday off!” Even if you’re not required to work Saturdays, if one’s projects or the company’s situation requires you to work Saturdays, you work Saturdays. See also, Sundays.

I’ve spoken with people who have worked in similar environments. They tell me the long hours are mostly a show of loyalty. The extra work accomplished after the 90th hour is fairly small, and may even be negative.

Contrast this example with a friend of mine. He’s a programmer who works remotely. He technically works full-time, but rarely works for more than a few hours each morning. The rest of the day he takes off.

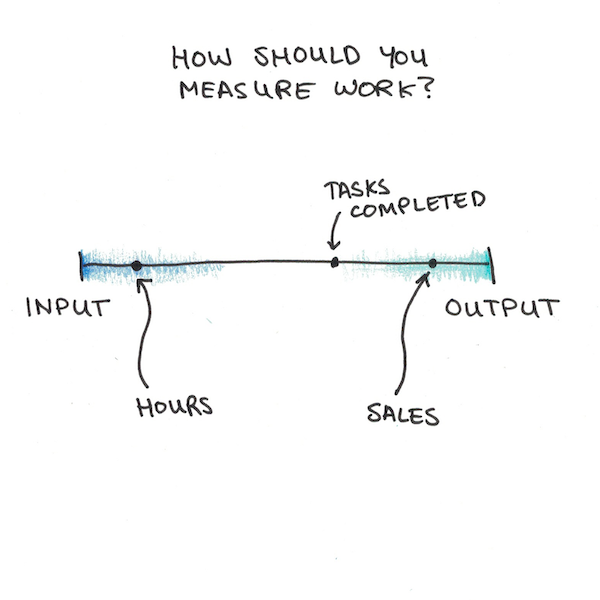

Measuring Productivity: Input or Output?

These two examples represent two extremes of evaluating productivity: input vs output.

The salaryman is (largely) judged on input. Loyalty, commitment and hellish hours. If he puts in the work, the work that comes out isn’t as important:

Incompetence at one’s job bordering on criminal typically results in one’s next promotion being to a division which can’t impact shipping schedules and has few sharp objects lying around.

In contrast, my friend is entirely judged on output. His employer doesn’t track his hours. Given his flexibility, however, he’d better perform. Regularly failing to meet deadlines would get him fired (or, at the very least, force a renegotiation of his unorthodox routine).

So which is better to measure: input or output?

The answer might seem obvious: results are what matter, so let’s measure results. The problem is that results aren’t always so easy to track.

The Drawback of Only Judging Outputs

I’ve met a few consultants who have the following business model: I help you implement some idea for your business. If profits go up, you pay me a percentage. If they don’t, you don’t owe me anything. Win-win, right?

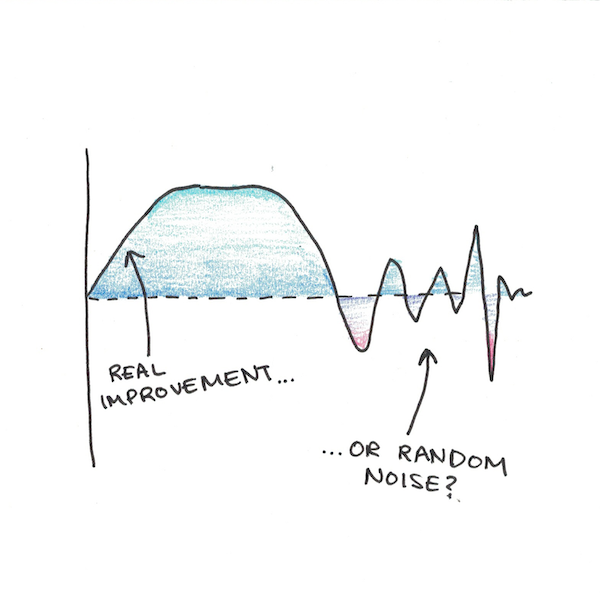

The trick is that businesses with volatile income will have many ups and downs. Most of that is random noise. Now imagine a consultant shows up with worthless advice that is pure placebo. Half the businesses he consults for see profits go up, and he gets a hefty cut of the upside. Half see profits go down, and he gets nothing. He makes a fortune, even though the advice is worthless.
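
To make the arithmetic concrete, here is a minimal simulation sketch. Everything in it is an assumption chosen for illustration: the function name `simulate_consultant`, the 20% cut, the number of clients, and the idea that each client's yearly profit change is pure Gaussian noise with zero average. The advice itself contributes nothing.

```python
import random

def simulate_consultant(n_clients=1000, cut=0.2, volatility=100_000.0, seed=0):
    """Fees earned by a consultant whose advice has zero real effect.

    Each client's profit change is pure noise (mean zero). The consultant
    takes `cut` of any increase and pays nothing for any decrease.
    All parameters are hypothetical.
    """
    rng = random.Random(seed)
    winners = 0
    total_fees = 0.0
    for _ in range(n_clients):
        change = rng.gauss(0.0, volatility)  # noise only; advice adds nothing
        if change > 0:
            winners += 1
            total_fees += cut * change  # paid on the upside only
    return winners, total_fees

winners, total_fees = simulate_consultant()
print(f"Clients whose profits rose: {winners} of 1000")
print(f"Fees collected for worthless advice: {total_fees:,.0f}")
```

Roughly half the clients come out ahead by chance, and the fee is always positive because the contract only ever looks at the upside.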

This may sound paranoid, but random changes can often temporarily boost outputs. Early management theorists first noticed this when production levels improved both when lights were brightened and when they were dimmed on the factory floor. It turns out just having consultants there fiddling with the switch made people work harder.

This isn’t to say profit sharing is bad. It’s often great. Rather, I want to show why this type of pay-for-results scheme isn’t universal. We often judge inputs because outputs are noisy, hard-to-measure or easy-to-game.

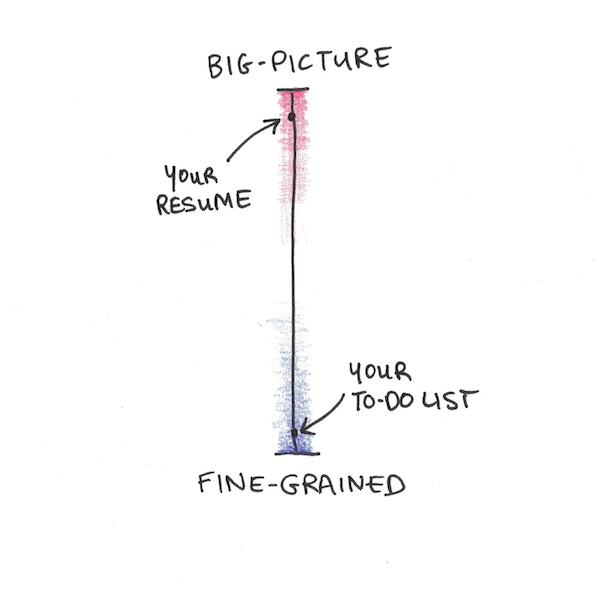

The Other Axis: Big-Picture and Fine-Grained

The problem with our pay-for-results consultant was that the payment comes too soon. What if we had them wait a year, to see if the improvements stuck?

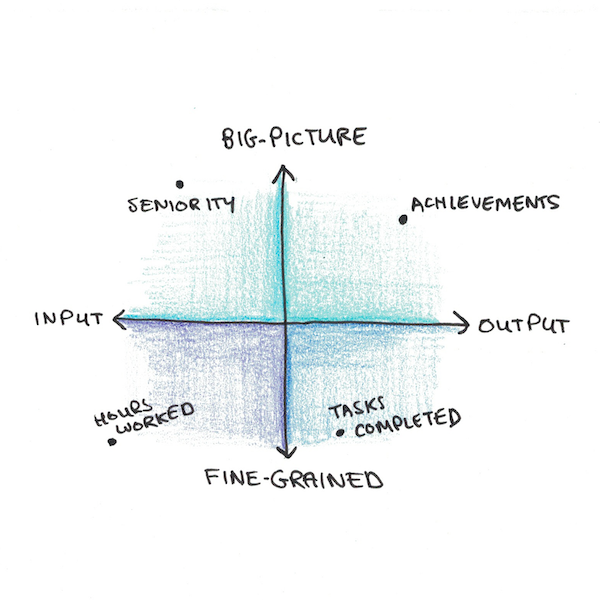

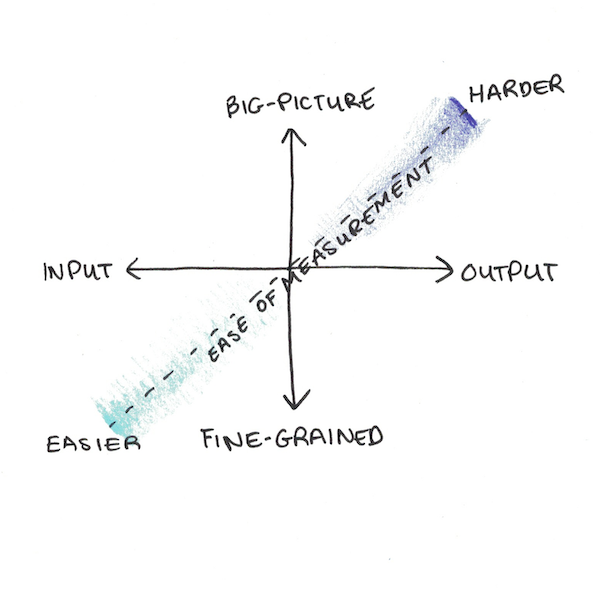

This suggests another dimension in measuring productivity, different from our first. On top of inputs and outputs, we can now look at the big picture or fine-grained details:

Big picture means looking back over years or decades and asking how much of a difference the work made. Fine-grained means tallying the minutes, giving an immediate measure of progress.

We can see that this axis is different from the first. Seniority is big-picture input. Lifetime return-on-investment is big-picture output. Hours worked is fine-grained input. To-do list items checked off is fine-grained output.

Once again, we see a trade-off. Big picture is what we care about, but it’s slow. That great American novel, Moby Dick, only sold a few thousand copies during the author’s life. Sometimes the big picture only becomes visible long after you’re no longer around to witness it.

How Should You Measure Productivity?

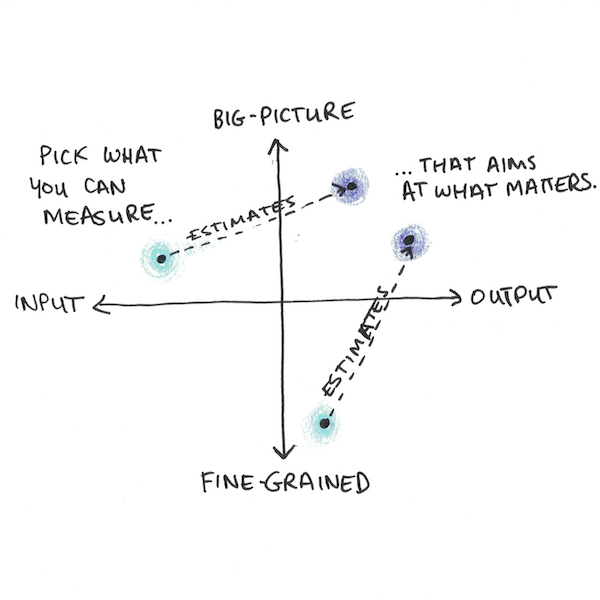

What matters most is often the hardest to track. Thus we’re often stuck measuring things we don’t directly care about, in the hopes that they point to something we do.

The solution has two parts:

1. Pick (a few) metrics that will estimate what matters.

These should be easy to measure and timely enough to give good feedback. The point of measuring things is to be able to make adjustments. If you need to wait until you’re retired to see how well you’ve done, it’s too late.

Tracking a few things makes it harder to game the system. If you reward only hours, you may wind up with a lot of overtime and not much useful work. But as long as you keep an eye on a few other areas of performance, hours worked isn’t always a bad estimate of how much work is being done.

2. Use meta-feedback to tune your short-term metrics.

Your big-picture output is harder and slower to measure. As a result, it often doesn’t make sense to use it for measuring your progress day-to-day. But it does serve a role in adjusting which of your short-term metrics you use to estimate progress.

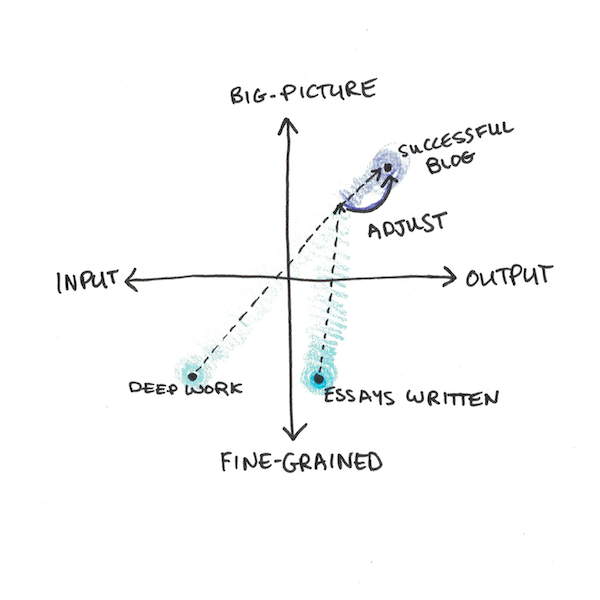

The problem is that measurements become incentives. This is true for yourself and for people you manage. At first, a metric may be neutral. But, over time, as the culture unconsciously adapts to incentives, people may find ways of meeting the metric without doing what made it matter.

Let’s say I start with “essays written” as a metric for my productivity as a writer. In the beginning, it’s pretty good: it tracks the growth of my audience well. But over time, I might hit my target by sacrificing research or editing. Quantity no longer generates quality, so the metric becomes less helpful.

However, tracking results over months or years, I might slowly adjust the weighting I give to these metrics to compensate. Now it’s not just “essays written” but also “hours of deep work” on each.

If I track the outcomes of each essay, I can see which kinds of essays tended to do better. This can help me decide whether the ideal weighting is more on “essays written” or “hours per essay”, depending on whether polished pieces or prolific production matter more.
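
One rough way to picture this meta-feedback loop in code is below. It is only a sketch under stated assumptions: the helper names `pearson` and `retune_weights` are hypothetical, the quarterly numbers are made up purely for illustration, and correlation with a long-term outcome is just one simple stand-in for "which short-term metric has been pointing at what matters."

```python
from math import sqrt

def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

def retune_weights(metrics, outcome):
    """Weight each short-term metric by how well it tracked the outcome.

    Negative correlations are clipped to zero, and the weights are
    normalized to sum to 1. This is a hypothetical scheme, not a
    recommended formula.
    """
    scores = {name: max(0.0, pearson(vals, outcome))
              for name, vals in metrics.items()}
    total = sum(scores.values())
    if total == 0:
        return {name: 1 / len(metrics) for name in metrics}
    return {name: score / total for name, score in scores.items()}

# Made-up quarterly figures, for illustration only.
metrics = {
    "essays_written": [12, 14, 13, 16, 15, 18],
    "hours_per_essay": [4, 3, 5, 3, 6, 7],
}
audience_growth = [100, 90, 130, 95, 150, 170]

weights = retune_weights(metrics, audience_growth)
for name, weight in weights.items():
    print(f"{name}: {weight:.2f}")
```

With these invented numbers, "hours per essay" ends up with the larger weight, which would suggest leaning toward polished pieces. Rerunning the calculation every few months is what keeps the short-term metrics honest.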

Shifting Measurements is More Robust

This approach is dynamic, not static. Instead of relying on a fixed standard, you’re constantly tweaking what you measure.

Shifting what you’re measuring, even slightly, might seem to be a drawback. But there are reasons to think it might make your work more robust.

This lecture on systems biology discusses a related problem: the evolution of modularity. If you simulate evolution on a circuit design to compute a common function, what you end up with is usually a mess. It computes what you want in Rube Goldberg-esque fashion. There isn’t the neat separation of functions that we see in actual living tissues or organs.

However, if you run the same simulation, but you wiggle the target being aimed at, you end up with the nicer modular solutions instead. Meta-feedback on what you measure, wiggling the targets slightly, stops you from reaching a fragile solution.

How you measure can impact your work in profound ways. An eye on hours can create a culture of ever-expanding work schedules. But results alone are tricky to measure and often gamed. The systems that end up succeeding are those that adapt.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.
