Recently I read Bill Chen and Jerrod Ankenman’s The Mathematics of Poker. Using sophisticated game theory, the duo analyze poker setups to figure out the optimal betting strategy.

One thing that jumped out immediately was simply how aggressive the correct betting strategy can be. There are common setups where it makes sense to go all-in, regardless of what is in your hand.

This runs counter to a common stereotype: that the people who know the most play it safe, and that it’s out of ignorance that most people make risky bets. In poker at least, it’s often the bad players who slowly lose money by missing out on bets that are slightly in their favor.[1]

Poker isn’t the only place I’ve noticed this pattern, however. The psychologists I know tend to be more likely to use mind-altering substances than average. Those with medical backgrounds tend to be more comfortable with drugs and vaccines. Biologists tend to think GMOs are safe, etc.

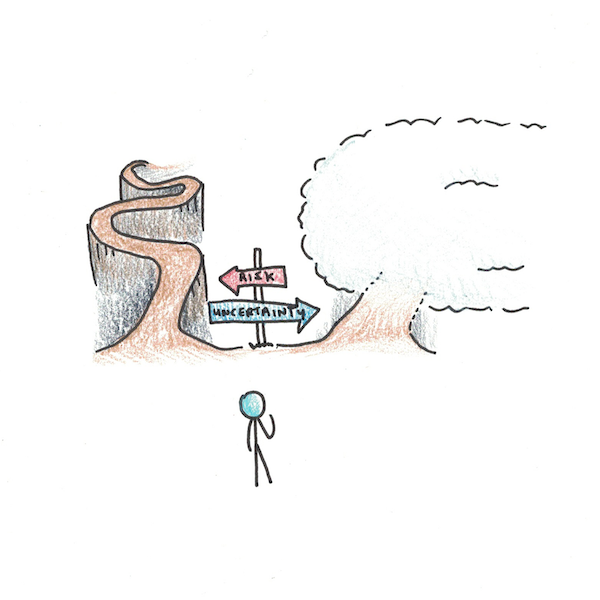

My point isn’t that we should all be taking more risks, because smart people (or mathematical analyses) take more risks. Rather, I think the pattern to note is that as you understand a system better you convert uncertainty into risk, and in doing so, are able to take on smarter risks (and avoid foolish bets).

Risk and Uncertainty

People typically use the words “risk” and “uncertainty” interchangeably. Technically speaking, however, they aren’t the same thing.

Risk is precise and quantifiable. Take flipping a coin. You can’t know whether it will come up heads or tails, but assuming you trust the coin, you know each outcome has a 50% chance.

Uncertainty, in contrast, occurs when the system producing the randomness is not understood. Say you’re offered a chance to invest in a business you know nothing about. Here the problem isn’t calculating whether the risk is worth it; you have no idea what the risk even is.

Knowledge can convert uncertainty into risk. If you learn about probability, it’s easy to take classic casino games and see that they tend to be a bad deal for your money. You lose a little on average. But, if you did see a spot where the odds were in your favor, you could make a much larger bet.
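To make the casino point concrete, here is a minimal sketch of the expected-value arithmetic. The specific numbers are illustrative assumptions rather than figures from the book: an even-money bet on red at an American roulette wheel (18 red pockets out of 38), and a hypothetical even-money bet you win 55% of the time.

```python
from fractions import Fraction

def expected_value(p_win, payout, stake=1):
    """Average profit per bet: win `payout` with probability p_win, otherwise lose `stake`."""
    return p_win * payout - (1 - p_win) * stake

# Assumed example: even-money bet on red, American wheel (18 red of 38 pockets).
roulette_ev = expected_value(Fraction(18, 38), payout=1)

# Hypothetical favorable spot: an even-money bet you win 55% of the time.
favorable_ev = expected_value(Fraction(55, 100), payout=1)

print(roulette_ev)   # negative: you lose a little on average
print(favorable_ev)  # positive: a spot where a larger bet could make sense
```

Once the probabilities are known, the sign of the expected value tells you which side of the bet you want to be on. How much to stake on a favorable bet is a separate question that this sketch does not address.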

While few domains of real life reach the mathematical certainty of card games, knowing more about a subject converts many uncertainties into risks. If you don’t know anything about physics, for instance, the idea that WiFi might cause cancer because it uses microwave radiation sounds plausible. Yet, if you know a bit more you can see that this worry is silly: each microwave photon carries vastly less energy than a photon of the visible light from an average lightbulb.
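One way to check the WiFi comparison yourself is to compare energy per photon using E = hf. The sketch below assumes a 2.4 GHz WiFi signal and 550 nm green light as a stand-in for lightbulb light. Both choices are illustrative, and the comparison is per photon, not total emitted power.

```python
PLANCK_H = 6.62607015e-34        # Planck constant in J*s (exact SI value)
SPEED_OF_LIGHT = 299_792_458     # speed of light in m/s (exact SI value)
JOULES_PER_EV = 1.602176634e-19  # joules per electronvolt (exact SI value)

def photon_energy_ev(frequency_hz):
    """Energy of a single photon, E = h * f, converted to electronvolts."""
    return PLANCK_H * frequency_hz / JOULES_PER_EV

# Assumption: WiFi on the 2.4 GHz band.
wifi_ev = photon_energy_ev(2.4e9)

# Assumption: green light at 550 nm, so f = c / wavelength.
green_ev = photon_energy_ev(SPEED_OF_LIGHT / 550e-9)

ratio = green_ev / wifi_ev
print(f"WiFi photon:  {wifi_ev:.2e} eV")
print(f"Green photon: {green_ev:.2f} eV")
print(f"Visible photon is roughly {ratio:,.0f} times more energetic")
```

Under these assumptions a visible-light photon carries a few hundred thousand times the energy of a WiFi photon.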

Coping with Uncertainty

The way to cope with risk is to calculate. Figure out the odds and you can optimize your decision.

This doesn’t work with uncertainty, however. Even if you were competent with the math, uncertainties don’t have any numbers to calculate. You can estimate, of course, but sometimes the ranges are simply too large to make an intelligent guess.

Since our ancestors largely lived in an uncertain, as opposed to a risky, world, our brains have evolved coping mechanisms to deal with uncertainty.

One of those is to maintain the status quo. Since many areas of uncertainty have more downside than upside (should you eat this unknown mushroom?), the policy of only doing things others have done in the past makes sense.

This tends to restrict people to repeating experiences they’ve had personally, or that people close to them have had. As a way of reducing the negative effects of uncertainty, this makes sense. But it can also create a trap where you avoid good opportunities just because nobody you know personally has tried them.

Uncertainty and Framing

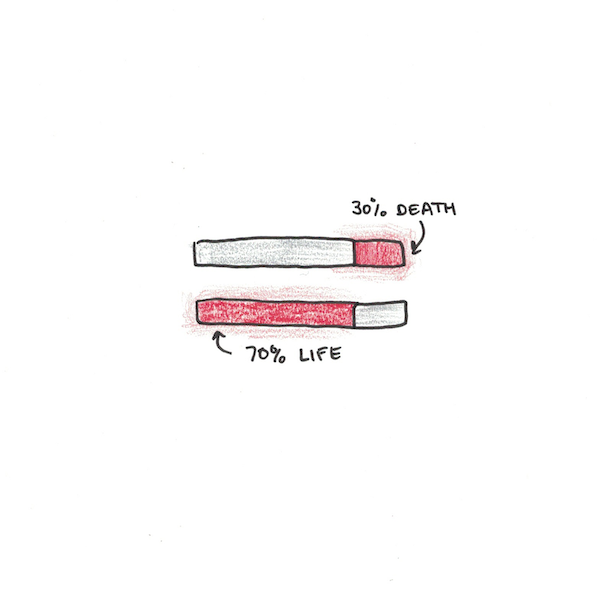

Uncertainty tends to favor certain framings of the risk. Nobel laureate Daniel Kahneman’s work on prospect theory finds that people are often willing to take riskier actions to avoid losses than to make gains—even when the situation itself is identical (just differently described).

From the perspective of risk this makes no sense. A 30% chance of allowing a death and a 70% chance of saving a life are just different ways of describing the same thing. But from the perspective of uncertainty, such an approach appears more rational. What matters is where the status quo is being set. Is the presumption that this person will live, and the uncertainty is taking an action that might cause death? Or is the presumption that this person will die, and the uncertainty is that this action to save them might not work?

When uncertainty is involved, whatever is taken as the “default” will exert an irrationally strong influence on the outcomes. Sometimes that default is pretty good, but in other cases it’s terrible and taking more chances is actually wise.

Risk and Arrogance

Once you understand a system, you can convert some of the uncertainty into risk. Sometimes you’re able to do this completely, as with the probabilities of a poker game. In other cases, you can restrict your ranges of uncertainties to a level where making a prudent calculation is possible.
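The idea of narrowing a range until a calculation becomes possible can be sketched in a few lines. This is a toy model under stated assumptions, not a method from any of the books mentioned: you know only that your win probability lies somewhere in an interval, and the function names and example numbers are hypothetical.

```python
def ev_bounds(p_low, p_high, payout, stake=1):
    """Bounds on expected profit when the win probability lies in [p_low, p_high].

    Assumes payout and stake are positive, so expected value rises with p.
    """
    def ev(p):
        return p * payout - (1 - p) * stake
    return ev(p_low), ev(p_high)

def decision(p_low, p_high, payout, stake=1):
    """Bet if even the worst case is profitable, fold if even the best case loses."""
    worst, best = ev_bounds(p_low, p_high, payout, stake)
    if worst > 0:
        return "bet"
    if best < 0:
        return "fold"
    return "unclear"

# Hypothetical ranges for an even-money bet.
print(decision(0.10, 0.90, payout=1))  # wide range: the numbers can't settle it
print(decision(0.55, 0.65, payout=1))  # narrowed by knowledge: profitable throughout
print(decision(0.30, 0.40, payout=1))  # narrowed by knowledge: losing throughout
```

With a wide interval the bounds straddle zero and the calculation cannot decide for you. Narrowing the interval, even without pinning down an exact probability, can be enough to reach a clear answer in either direction.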

More knowledge allows you to make more precise bets. These bets will often look overly aggressive or foolish from the perspective of someone without that knowledge. Conversely, a more knowledgeable person also avoids the foolish status quo bets that ordinary people make to their detriment.

Belief in knowledge can sometimes be misplaced. This is a major takeaway of Nassim Taleb’s body of work: people often think they understand systems that they really don’t. This seems particularly true in finance, where bad incentives and mathematics PhDs combine to create an epistemic arrogance that can explode spectacularly.

But while Taleb is correct that we can overestimate our ability to transmute uncertainty into risk, I don’t take the position of the extreme skeptic who argues that all understanding is ultimately an illusion. Mistakes in any gamble run both ways. Betting when you should fold and folding when you should bet are both errors. Similarly, believing you know when you’re actually clueless and pretending you cannot know when knowledge is possible are both errors. The goal should be to minimize errors overall, not to guard against only one flavor.

Taking Smarter Risks

Uncertainty casts a layer of fog over many of our decisions. This makes us tread cautiously, as if any step might take us over a cliff. Broadly speaking, this is wise, as falling down cliffs is really bad. Yet most of the decision terrain isn’t nearly so fraught. Things that look risky often aren’t, and things that seem ordinary and safe often are!

The solution is to learn more. Spend more time trying to understand things. As your knowledge grows, the fog recedes and the treacherous paths separate themselves from the gentle passages.

Footnotes

- This goes both ways, of course. Poker is zero-sum, or slightly negative-sum once you consider the cut for the house. This means that for every error of betting too weakly, there is an opposite error of betting too aggressively. My point is simply that the loss from failing to bet aggressively is less obvious than the loss from mistakenly betting too much.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.
