How much do we learn simply by doing the things we’re trying to get good at? This question is at the heart of a personal research project I’ve been working on for several months. Readers can note some previous entries I’ve already written on this project.

My original intuition was that we get good at what we practice, and skills are often quite specific. This suggests doing the real thing—focusing precisely on what we want to get good at—is underrated.

I still think this view holds. But this simple statement belies a lot of complexity. There’s a lot of fascinating research showing which conditions lead us to get better through direct practice. These, in turn, have shaped my views about the best way to get good at complex skills.

Here are some of the research findings I’ve uncovered.

1. Complexity and Cognitive Load

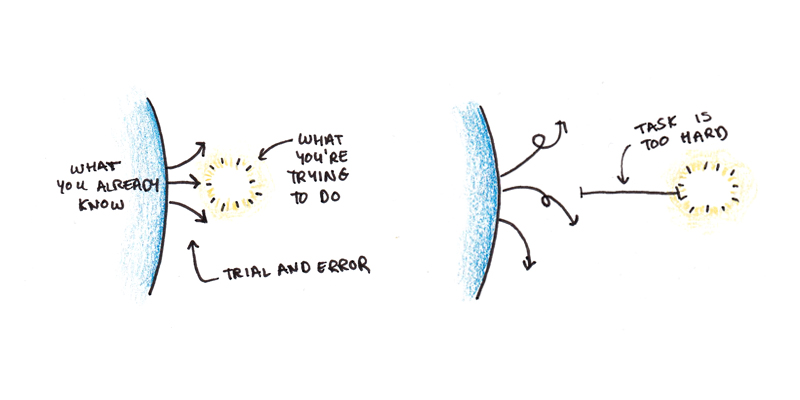

One of the major mediators of the “learning by doing” thesis is task complexity. Absent instruction, we need to rely on what cognitive scientists refer to as “weak methods” to solve problems. These include trial-and-error and means-ends analysis. There’s research showing that, at least in some cases, an overreliance on this kind of “figuring things out” can make it harder to learn.[1]

The obvious problem with this is that if something is too hard, you simply can’t do it. If you can’t do it, then “practicing it” is meaningless. This problem can arise in some educational approaches. When students are given overly complex problems, they may default to “weak methods” to solve them and fail to actually practice the knowledge and skills they’re supposed to learn.

However, there’s also a more subtle problem. Even if you can figure out the problem, the working memory required for solving it may be so great that you don’t encode the procedure well.

This is the conclusion behind John Sweller’s research on cognitive load theory. Experiments show that seeing lots of examples tends to beat problem solving in a head-to-head comparison for algebra problems.[2] However, the result flips once you figure out the pattern, and problem solving becomes more useful.[3]

My views on this issue seem to correspond most closely to those of Jeroen J. G. van Merriënboer and Paul Kirschner. Their instructional design guide, Ten Steps to Complex Learning, argues for the importance of starting with the real tasks to be learned. But they also stress the importance of having lots of support and structure in the early phases of learning to avoid the problems Sweller’s research highlights.

2. Prerequisite Skills and Concepts

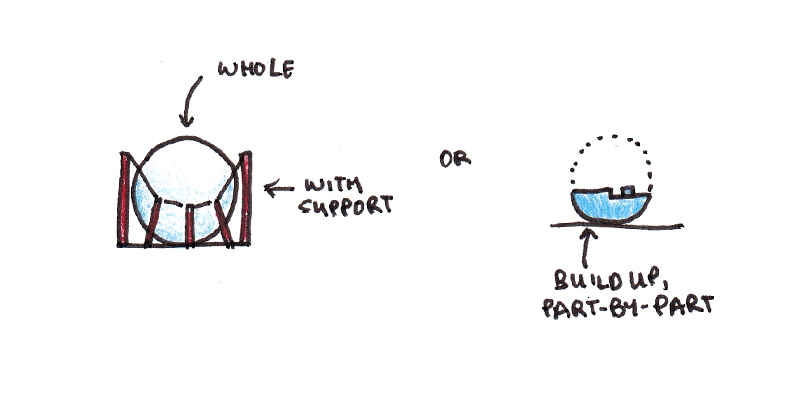

A related issue is how much weight to give to learning the fundamentals versus doing the whole task. This, it turns out, is a contentious issue in the research. Some argue that presenting the entire task from the outset is necessary to integrate skills and knowledge properly. Others argue that it’s essential to start from the basics first.

A representative of the former view would be Allan Collins and John Seely Brown’s cognitive apprenticeship. The idea here is to present students with the real situations and give enough assistance and structure so that the cognitive load isn’t overwhelming.

An alternative approach, Direct Instruction, was developed by Siegfried Engelmann and Wesley Becker. In this theory, skills are built from the bottom up, with careful attention paid to the full range of component skills to minimize later issues with transfer to the whole task. Direct Instruction performs well in educational settings, despite being unpopular for its perceived “assembly line” approach to teaching.

It seems clear that both the structured whole and part-to-whole approaches can be successful. But the details matter quite a bit. Presenting information in a full context, even with appropriate scaffolding, may make it harder to focus attention on what is to be learned. This seems to be the critique of problem-based learning in medicine, where students are taught all their medical knowledge in the context of patient cases. It might be harder to learn how the body works, if it’s always presented within the context of individual patients.

However, the research on part-to-whole learning is not uniformly positive.[4] Many skills must change when they are integrated with other tasks. When component skills are separated in time, practicing each skill by itself can work well (e.g., parallel parking while learning to drive a car). However, when skills need to be performed at the same time (e.g., learning to shift gears while also steering the car), practicing each skill alone is often less effective than simply practicing the whole task.

What’s the right approach? I think it depends. If you were trying to learn quantum mechanics and didn’t know algebra, you’d be in for a hard time trying to start there. But many educational programs pretend there are prerequisites that don’t actually exist in practice—learning to speak Chinese, for instance, doesn’t actually require learning to handwrite, and teaching it that way may throw up unnecessary barriers for someone who only wants to speak.

3. Deliberate Practice and Plateaus

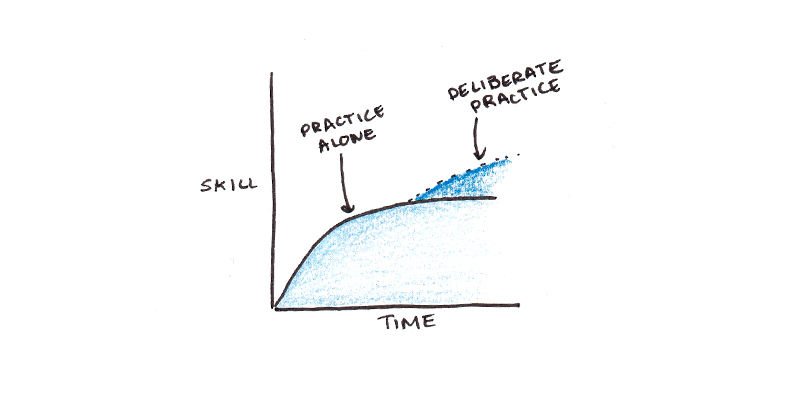

Anders Ericsson’s work on deliberate practice makes a distinction between performing a skill and practicing it. His research found that elite performers spent more time engaged in the careful act of improvement, rather than merely using the skill a lot.

Examples of this include the link between achievement and the quantity of deliberate practice in violin players, chess players benefiting more from increases in “serious study” than from extra tournament play, and the decline in skill that medical practitioners experience the longer they have been away from school.[5][6]

Some research challenges the deliberate practice concept and its relevance to professional skills.[7] But, for the moment, let’s assume that Ericsson’s observation about the nature of improvement is correct. What does that say about my original idea that we get good by doing the real thing?

In some ways, there’s no contradiction, only a matter of scope. You get good at what you do. If you play basketball, you’ll get better at basketball. If you practice shooting layups, you’ll get better at shooting layups. If you can practice a few hundred layups in an hour doing drills, and only a handful of times during a game, you’ll get better faster doing drills. The drilled layups may be harder to integrate than the layups played during the game. Still, the rate of practice is much higher in the drills, so the efficiency may be worth the trade-off.
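To make that trade-off concrete, here is a rough sketch with made-up numbers. I'm assuming "a few hundred" means 300 layups per hour in drills and "a handful" means 5 per hour in a game. The `DRILL_REP_VALUE` figure, which says a drilled layup is worth a quarter of an in-game layup, is purely hypothetical and not drawn from any study.

```python
def game_equivalent_reps(reps_per_hour: float, rep_value: float) -> float:
    """Reps per hour, weighted by how much one rep is worth relative to an in-game rep."""
    return reps_per_hour * rep_value


def break_even_value(game_reps_per_hour: float, drill_reps_per_hour: float) -> float:
    """Value per drilled rep at which an hour of drills equals an hour of game play."""
    return game_reps_per_hour / drill_reps_per_hour


# Hypothetical numbers chosen only for illustration.
DRILL_REPS_PER_HOUR = 300  # "a few hundred"
GAME_REPS_PER_HOUR = 5     # "a handful"
DRILL_REP_VALUE = 0.25     # assumed: a drilled layup is worth 1/4 of a game layup

drill_hour = game_equivalent_reps(DRILL_REPS_PER_HOUR, DRILL_REP_VALUE)
game_hour = game_equivalent_reps(GAME_REPS_PER_HOUR, 1.0)
threshold = break_even_value(GAME_REPS_PER_HOUR, DRILL_REPS_PER_HOUR)

print(f"Drill hour: {drill_hour:.0f} game-equivalent reps")
print(f"Game hour:  {game_hour:.0f} game-equivalent reps")
print(f"Drills win while each drilled rep is worth more than {threshold:.1%} of a game rep")
```

Under these assumptions, drills stay ahead unless a drilled layup is worth less than about 2% of a real one. The model ignores everything else a game teaches, so treat it as an illustration of the rate argument rather than a measurement.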

A secondary factor seems to be the incentive structure created by the environment. When we enter a new environment, our performance often quickly reaches adequacy and then levels off. Once our performance is “good enough,” we stop actively seeking ways to improve it.

One route to improvement would be to disengage from the performance requirements of the job and focus exclusively on practice. But it depends on accurately identifying critical skills and creating conditions for practice. This is hard to do for nebulous skills, and, I’d argue, a lot of what we’re trying to get good at is in ill-defined domains of performance.

Another strategy is to change your environment so that there are increased pressures for quality. As a writer, I found writing a book with a traditional publisher (and fixing a specific goal for the kind of book I wanted to write) did more for me than writing “drills.” Since pursuing real challenges also has benefits beyond simply getting better at the skill itself, I tend to favor it over isolated practice. However, there are probably cases where isolated practice is necessary.

4. Background Knowledge

Knowledge tends to be more skill-like than we may assume. Research on transfer-appropriate processing, for instance, shows that we encode the same information differently, depending on how we think we’re going to use it. Work on the testing effect and retrieval practice shows that practicing remembering strengthens memory more than repeatedly looking at the content.

However, there’s a lot to be learned from reading and listening. As a scientist, you would be at a significant handicap if you only performed experiments and never read the surrounding literature! Similarly, understanding the background knowledge that underpins a domain is enormously helpful.

The question that interests me is: how much background knowledge should you obtain before practicing? Our experience of school suggests the answer is a lot. In school, you spend years studying before you “do” anything that actually resembles the real thing.

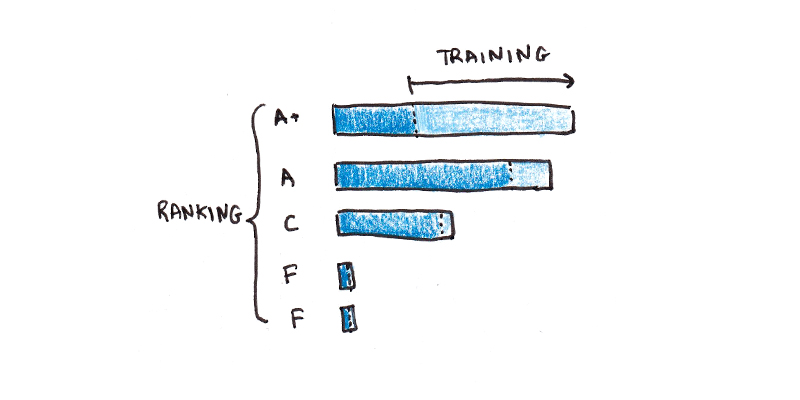

I think this is more an artifact of our educational system than an ideal path to mastery. Schools serve two goals: teaching useful background knowledge and skills, and sorting students by ability. This sorting function may be the more influential force in how our educational institutions actually work.

To see why, consider two hypothetical training activities: one with high fidelity to the real activity, but low ability to grade and sort students; and another that’s contrived and unrealistic, but is easy to rank. If the goal were training, you’d want more of the first type. If the goal were sorting, you’d want more of the latter. Given that many of our educational institutions lean so heavily on the latter, that’s a good indicator of where priorities lie.

5. How Common Are Tasks With Greater Than 100% Transfer?

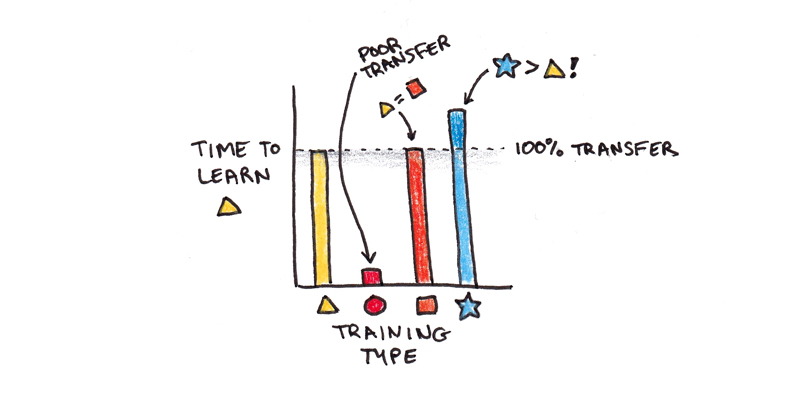

Transfer of learning addresses a question: if you learn task A and then learn task B, how much faster will you learn B because you already know A? Zero transfer means that time spent on the first task offered no help for the second. One-hundred percent transfer means practicing A was just as good as practicing B. Greater than 100% means that, to get good at B, you shouldn’t do B! You should practice A instead since it will be more efficient.
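One way to pin these percentages down is a simple "savings" calculation: the hours of practice on B that you no longer need, divided by the hours you spent on A. This is my own sketch of the definition above, not a formula taken from the research, and the function name and example hours are hypothetical.

```python
def transfer_percent(hours_on_a: float,
                     hours_for_b_alone: float,
                     hours_for_b_after_a: float) -> float:
    """Percent transfer from A to B: hours saved on B per hour spent on A.

    Assumed definition for illustration only.
    """
    if hours_on_a <= 0:
        raise ValueError("hours_on_a must be positive")
    hours_saved = hours_for_b_alone - hours_for_b_after_a
    return 100.0 * hours_saved / hours_on_a


# Hypothetical case: B takes 10 hours from scratch, and you first spend 4 hours on A.
print(transfer_percent(4, 10, 10))  # B still takes 10 hours: A did not help
print(transfer_percent(4, 10, 8))   # B now takes 8 hours: A helped, but less than B would have
print(transfer_percent(4, 10, 6))   # B now takes 6 hours: an hour on A was as good as an hour on B
print(transfer_percent(4, 10, 5))   # B now takes 5 hours: A was the more efficient route
```

In the last case the total is 4 + 5 = 9 hours, which is less than the 10 hours of practicing B alone. That is what transfer above 100% amounts to.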

The research on transfer shows that most tasks are between 0 and 100%. In other words, learning A can occasionally help with learning B, but it’s usually worse than doing B directly. In cases where A and B differ considerably, the transfer tends to be even lower than most people think.

In some cases, the transfer is much less than you’d naively expect. Learning to do algebra problems of one type, for instance, transfers surprisingly poorly to learning ones of a slightly different kind.[8]

However, tasks with greater than one-hundred percent transfer do exist. For example, doing a simplified version of a task can result in better learning than the most complex version due to cognitive load. Thus learning is better when it “ramps up” rather than diving right into the most challenging scenario to start.

But what about less obvious cases? One that has been proposed to me comes from language learning. Beyond a certain point, the argument goes, reading and listening in a language are better practice activities for speaking ability than speaking. If this is true, it would be a prominent counterexample to my bigger idea.

Supposing this statement is correct, what might explain it? One possible answer relies on background knowledge and cognitive load. Reading in a language exposes you to a greater variety of vocabulary than you might acquire in conversation. Reading also reduces cognitive load—you can stop to look up words—so that it is easier to learn underlying patterns.

How general is this phenomenon of greater-than-100% transfer? I suspect most cases fall under issues of task complexity and exposure to background knowledge. However, even in the case of language learning, it’s certainly possible to be a fluent reader or listener and be unable to speak. So even when doing something else may benefit skill acquisition more than direct practice, the amount of direct practice should probably never go to zero.

6. Are Some Skills Not Learnable?

A final wrinkle in my overall picture is that some skills may not be learnable through practice at all. James Voss and Timothy Post have found that experts outperform novices in certain categories of prediction tasks but grossly underperform simple linear regression models.[9]

The assumption that we get good at what we practice presumes improvement is possible. But it’s probably the case that many skills simply aren’t improvable. The cue-response relationship is too complicated and there aren’t any training methods that properly break it down into a sequence of learnable steps.

Some skills are difficult or impossible for humans to learn, but mathematical models can do them. In these cases, the key skill is learning to use the mathematical model. As machine learning advances and more data become available, I suspect we will develop more of these machine-human hybrid skills.

In many ways, this finding isn’t entirely surprising. I don’t know how to calculate cube roots with just a pencil and paper, but I can use a calculator to do it immediately.

Much of skill learning also involves figuring out which tools can improve your performance. For millennia, technology has augmented our physical skills. More recently it has replaced the “lower” cognitive skills of memory and algorithmic calculation in many instances. However, we might be entering an age where “higher” skills such as judgement, taste and decision-making may be relegated to machines or computer-human hybrids.

What Are the Practical Implications for Self-Improvement?

My general advice remains: do the thing you’re trying to get good at. But there are useful considerations to keep in mind:

- Instruction plus practice beats practice alone.

- If the task is too difficult, seek lots of examples and find simpler versions of the task to start.

- Broad background reading and study are helpful. But they can’t replace doing the real thing.

- Isolated practice of component skills can be effective. But these skills are often subtly different when doing the real thing. Thus, a good habit is alternating between whole and part practice.

- Cases where transfer exceeds 100% can exist. Usually, they arise because the alternative training task exposes you to information you can’t discover through practice, or because it reduces cognitive load enough that it aids in knowledge acquisition. But, as with the previous point, alternating between these activities and the real thing is probably helpful to avoid integration problems later.

- Some skills may not be learnable through practice. In these cases, relying on tools that remove the need for direct improvement may beat extensive experience.

As I continue researching this topic, I’ll do my best to share what I find with you!

Footnotes

1. Paul A. Kirschner, John Sweller, and Richard E. Clark, “Why Minimal Guidance During Instruction Does Not Work: An Analysis of the Failure of Constructivist, Discovery, Problem-Based, Experiential, and Inquiry-Based Teaching,” Educational Psychologist 41, no. 2 (2006): 75-86, https://doi.org/10.1207/s15326985ep4102_1.

2. John Sweller, “Cognitive Load During Problem Solving: Effects on Learning,” Cognitive Science 12, no. 2 (April-June 1988): 257-285.

3. Slava Kalyuga, “Expertise Reversal Effect and Its Implications for Learner-Tailored Instruction,” Educational Psychology Review 19 (December 2007): 509-539.

4. Christopher D. Wickens, Shaun Hutchins, Thomas Carolan, and John Cumming, “Effectiveness of Part-Task Training and Increasing-Difficulty Training Strategies: A Meta-Analysis Approach,” Human Factors 55, no. 2 (April 2013): 461-470, https://doi.org/10.1177%2F0018720812451994.

5. Niteesh K. Choudhry, Robert H. Fletcher, and Stephen B. Soumerai, “Systematic Review: The Relationship Between Clinical Experience and Quality of Health Care,” Annals of Internal Medicine 142, no. 4 (February 2005): 260-273, https://doi.org/10.7326/0003-4819-142-4-200502150-00008.

6. K. Anders Ericsson, Ralf T. Krampe, and Clemens Tesch-Römer, “The Role of Deliberate Practice in the Acquisition of Expert Performance,” Psychological Review 100, no. 3 (July 1993): 363-406.

7. Brooke N. Macnamara, David Z. Hambrick, and Frederick L. Oswald, “Deliberate Practice and Performance in Music, Games, Sports, Education, and Professions: A Meta-Analysis,” Psychological Science 25, no. 8 (August 2014): 1608-1618, https://journals.sagepub.com/doi/10.1177/0956797614535810.

8. Stephen K. Reed, “A Schema-Based Theory of Transfer,” in Transfer on Trial, eds. Douglas K. Detterman and Robert J. Sternberg (Norwood, New Jersey: Ablex Publishing Corporation, 1993), 39-67.

9. James F. Voss and Timothy A. Post, “On the Solving of Ill-Structured Problems,” in The Nature of Expertise, eds. Michelene T. H. Chi, Robert Glaser, and M. J. Farr (Hillsdale, NJ: Lawrence Erlbaum Associates, 1988), 261-287.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.
