recursive.

Recursion is the algorithmic equivalent of Matryoshka dolls: the same process nested inside itself again and again. This may sound complicated, but we use recursive processes all the time.

To see what I mean, click on any text underlined like this.

Consider trying to clean your house. This is a big task, so you might decide to informally operate with the logic, “If the cleaning task is too big, break it into sections and clean each section.” Following this, you decide to split it up into cleaning the kitchen, living room, bathroom, bedroom and garage. Cleaning the bathroom is still a large task, so you might repeat the recursive procedure and split it into cleaning the bathtub, mirrors, toilet, sink and floors.

This is a trivial example, but recursion can go deep. Understanding a difficult idea might have dozens of nested layers of explanation to get it completely from scratch.

Explanations are recursive because, in order to explain a big idea, you have to explain the pieces that support that idea. To understand those pieces, you have to explain their pieces, and so on. Nested Russian dolls.
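The house-cleaning logic above can be sketched as a small recursive function. This is only an illustration: the `SUBTASKS` table and the `clean` function are hypothetical names, and the rule "a task with no listed sections is small enough to do directly" is an assumption made for the sketch.

```python
# Hypothetical task tree: each big task maps to the sections it splits into.
# A task with no entry is assumed to be small enough to do directly.
SUBTASKS = {
    "house": ["kitchen", "living room", "bathroom", "bedroom", "garage"],
    "bathroom": ["bathtub", "mirrors", "toilet", "sink", "floors"],
}


def clean(task):
    """Return the list of small jobs needed to finish `task`, in order."""
    sections = SUBTASKS.get(task)
    if not sections:
        # Base case: the task is small enough, so just do it.
        return [task]
    jobs = []
    for section in sections:
        # Recursive case: apply the very same procedure to each section.
        jobs.extend(clean(section))
    return jobs


print(clean("house"))
```

The function calls itself on each section, which is the doll-inside-a-doll structure: the bathroom is handled by exactly the same procedure as the house.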

Click on any underlined text and it will expand the explanation.

Refreshing the page will re-collapse the article. You can selectively collapse any expanded explanation by clicking on the little superscript like this one just to the right of this period.

Knowing what you know is called metaknowledge. How many times have you shown up to a test, thinking you know the material, only to be baffled by difficult questions? Many students who don’t understand the problem of metaknowledge are quick to cast blame on an unfair exam or teacher. After all, they think they know the material, so how could they possibly fail?

With facts, metaknowledge is relatively easy. If you want to convince yourself you know the capital of France, you think Paris, and convince yourself you have the right answer.

The Problem of Metalearning

Metaknowledge is often one of the hardest parts of learning something new. It isn’t simply that you must acquire new facts, concepts and skills, but it might also be unclear exactly what is missing. Leo Tolstoy explained it best:

“The most difficult subjects can be explained to the most slow-witted man if he has not formed any idea of them already; but the simplest thing cannot be made clear to the most intelligent man if he is firmly persuaded that he knows already, without a shadow of a doubt, what is laid before him.”

It’s not what you know, but what you know that just ain’t so, that gets you into trouble. (This previous sentence is often attributed as a quote to Mark Twain, but it turns out he never said it. The closest we get is a related quote by another 19th-century humorist, Josh Billings.)

Interestingly, confidence in knowing that you know and actual knowledge can often be inversely correlated. The Dunning-Kruger effect is a phenomenon where those with less skill or knowledge falsely believe themselves to know more than those with greater skill.

As Bertrand Russell famously stated, “The problem with the world is that the stupid are cocksure, while the intelligent are full of doubt.”

The Dunning-Kruger Effect

The original studies for the effect were conducted by David Dunning and Justin Kruger at Cornell. They found that participants were overconfident in a variety of skills such as reading comprehension, medicine, driving, chess or tennis. The duo suggested that the reason for this overconfidence came down to metaknowledge. When participants lacked skill, they simultaneously lacked the ability to assess their own skill. Only by being exposed to training for the skill did participants begin to realize their own inadequacies.

Lest the tone of this effect sound accusatory, I believe I’ve also been a victim of this effect before. I’ll often believe I have a reasonable grasp on a topic or skill, until I start learning it more deeply. Then, as I learn more and more, I also become aware of how much I don’t know and the depths of expertise that others possess that I only vaguely understood in the beginning. Every answer itself asks new, previously unseen questions. Knowledge expands, but the space of perceived ignorance expands even faster.

Do You Know Your Facts?

Factual knowledge is easier because the procedure of self-testing gives a fairly reliable cue for whether you possess the knowledge. However, even here, metaknowledge can be incorrect if a different, less reliable procedure is used.

Many students, for instance, don’t check their factual knowledge with self-testing. Instead, they use activities like re-reading notes to convince themselves they understand the material. This creates a cue that the knowledge was effectively recognized, but not effectively recalled.

Understanding, however, is different. This is because understanding isn’t all-or-nothing. You may feel you know a little, but not enough.

Recall vs Recognition

Recall and recognition are different cognitive processes, but there is debate about whether they are different aspects of the same mechanism (e.g. recognition is just weaker recall, or recognition is a type of recall) or whether they are different mechanisms (e.g. recall involves cognitive processes which aren’t used in recognition).

Although researchers debate whether they rely on the same or separate mechanisms, it is the case that, under normal circumstances, recognition is easier than recall. Convincing yourself that you’ve seen something before, when presented it, is much easier than having to search for it given a prompt that does not contain the answer you’re searching for.

Consider our capital city example once more. Now, imagine if, instead of Paris, I had asked you the capital of Hungary. Many North Americans would rightly guess Budapest right away. But, many others might stumble, falsely giving the name of another eastern European capital (Prague or Belgrade), or even blank entirely. However, if I asked whether Budapest *is* the capital of Hungary, many more people would quickly assess that this is correct.

What is the Relationship Between Recognition and Recall in the Brain?

Research in cognitive science typically proceeds in the following fashion: someone suggests a model of a system, trying to capture some important aspects of how humans think and learn. This model is then compared against experimental evidence which either supports or refutes the model.

The state of cognitive science is nascent enough that many fundamental questions of how the brain does what it does are still open to competing interpretations. The difference between recall and recognition is a great example of this.

In the research on the relationship between recall and recognition, two important models are generate-recognize models, proposed by Anderson, Bower, Kintsch and others, and Tulving’s synergistic ecphory.

The generate-recognize model states that recall is a more elaborate process that itself includes recognition. You first generate candidate information (generate) and then you recognize whether or not it is correct (recognize). Experiments seem to offer empirical support that recall can, in fact, be estimated by separately measuring these two different mechanisms.
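As a rough sketch of the two-stage idea, recall can be estimated as the product of the two separately measured probabilities. The function name `predicted_recall` and the numbers below are hypothetical and not taken from any study; treating the two stages as independent is an assumption of this simple form.

```python
def predicted_recall(p_generate, p_recognize):
    """Two-stage estimate: an item is recalled only if it is first
    generated as a candidate and then recognized as correct.

    Assumes the two stages are independent, so the probabilities multiply.
    """
    for p in (p_generate, p_recognize):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    return p_generate * p_recognize


# Hypothetical values for illustration only.
print(predicted_recall(0.5, 0.8))
```

Because both factors are at most one, the estimate can never exceed the recognition rate, which is the sense in which this model treats recall as recognition plus an extra step.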

Tulving and Thomson, however, have a rather strong critique of this. They contend that if recall itself includes recognition, then it would be impossible to recall an item that you couldn’t recognize. Their experiments, however, show that, under special circumstances, you can do exactly that: subjects can recall an item without being able to recognize it.

Generate-Recognize Models

Bahrick (1970) investigated the two-stage approach with an experiment involving three different groups. One group was asked, after reading a list of words, to generate as many words related to a given category as possible. This gave a rough percentage of successful generation for words. A second group was asked to recognize those words from a larger bank of words of the same category. This gave a percentage of successfully recognized words. Finally, a third group was asked to freely recall the words from the original list in that category. This experiment showed that the free-recall condition was related to the multiplied probabilities of the two stages independently.

Tulving’s Critique of the Two-Stage Approach

To establish this effect, they used an ingenious experimental design. Like most memory experiments, this involved subjects learning a list of words paired with cues (e.g. COLD-ground, KNIFE-pen). However, all of the to-be-remembered words also happened to have a strongly associated word (e.g. COLD-HOT, KNIFE-FORK). The experiment then proceeded in three stages:

- Subjects first learned the words with their cues (COLD-ground).

- Subjects were presented with a list of words which included the strongly associated words (HOT, FORK). These were not words given earlier, but in many cases the subjects nonetheless produced many of the words from the original list. Subjects were asked to tick the words that were from the original list. Invariably, recognition that some of the words produced were from the original list was poor.

- Afterwards, subjects were given their original cues and asked to recall the words (e.g. ______-ground, _______-pen). Recall, here, was actually better than recognition, with subjects recalling more words than they recognized in the previous step.

Their alternative model is that recall and recognition are not actually separate processes, but different variations of the same underlying process, which they call synergistic ecphory.

Synergistic Ecphory

The term “ecphory” comes from a Greek word which roughly means “to be made known.” In Tulving’s theory, memories are stored in combination with some of the information about the event of the original encoding. This includes the cue or cues that would be necessary to retrieve them. In Tulving’s account, this offers a single mechanism for explaining both recall and recognition. Recognition is just a more specialized type of recall where the cue needed to retrieve the knowledge is the knowledge itself. Tulving can then explain why recognition is normally easier than recall: under normal circumstances, a cue that is the information itself is a really good cue!

Why Recognition is Easier Than Recall

Why Metaknowledge is Much Harder with Explanations Than Facts

The result? People often think they understand things much better than they actually do.

Feeling of Knowing

Researchers actually have a special term for the feeling you have that you know something, creatively called feeling of knowing or FOK. One of the first questions researchers had about FOK was where it came from. Was it just a direct sense of memory strength? In other words, were subjects directly accessing memory engrams and using the strength of those to determine whether they knew something? Through some ingenious experiments, the answer to that question appears to be no.

In an experiment by Benjamin, Bjork and Schwartz (1998), researchers first asked subjects to answer easy questions. They then asked subjects how many of the answers they could recall freely afterwards if they weren’t presented the questions again. The more questions subjects initially got correct, the more they thought they would later recall. However, this turned out to be negatively correlated with free recall.

This shouldn’t suggest that FOK is always fallible. In many normal circumstances it does predict your ability to know something later. This research merely implies that FOK is not based on direct access to the strength of a memory.

FOK isn’t based on direct access to memory strength, but on various mental cues that suggest whether the information is available.

Where Does Feeling of Knowing Come From?

These mental cues are often related to fluency of processing. That is to say, if thinking about something is easier, you’re more likely to have higher FOK than if thinking about it is hard. Since you don’t actually know how strong a memory is that you aren’t able to access directly, you base your prediction off of something you do have access to, namely, ease of processing.

As a clue to metaknowledge, ease of processing isn’t so bad. However, it can create difficulties when greater ease actually results in worse learning.

A great example of this was Hertzog, Dunlosky, Robinson and Kidder’s 2003 study, where subjects memorized pairs of words using visual imagery.

The method is fairly simple. Take two words you want to associate. This could be a foreign language word and its translation, a medical term and a plain English equivalent, or even two random words as in the case of these experiments. Now, if both words are easily visualizable, simply combine the images of those words in a random, crazy picture. (Duck > Boat might have a giant duck rowing a boat)

If the words aren’t visualizable, you can use a stand-in with an abstract symbol (a clock for time) or a “sounds-like” substitute (“shave an ear” for the French chavirer).

Subjects assumed that the word pairs that they quickly formed images for would be the most likely to be remembered, but it was actually the opposite. This is most likely because longer processing, while being more difficult, also led to subjects holding the mental image longer, which contributed better to recalling the pairing later.

Overconfidence of understanding is particularly strong with things which are familiar, like bicycles or can openers.

The Problem of Explanatory Depth

With a factual question, it is relatively easy to self-assess knowledge. You simply try to think of the answer, and if it comes up, you probably know it. The only risk here is that you have mistaken confidence in a wrong answer.

With explanations, you can bring up many different pieces of knowledge at different levels of detail. It’s not possible to go through every single thing you know to accurately assess your knowledge, so you cheat. Instead of using actual knowledge as your cue, you base your feeling of understanding on how easily you’re able to bring up explanatory pieces or experiences of competence with the concept in question.

While this ease-of-processing judgement isn’t a terrible mechanism, it’s not a perfect one. It can lead you to confuse frequent exposure with deep understanding. It can also lead you to confuse competent use with competent understanding.

Can You Draw a Bicycle or Can Opener?

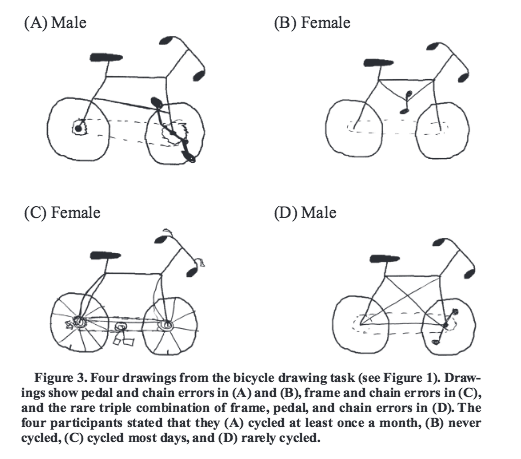

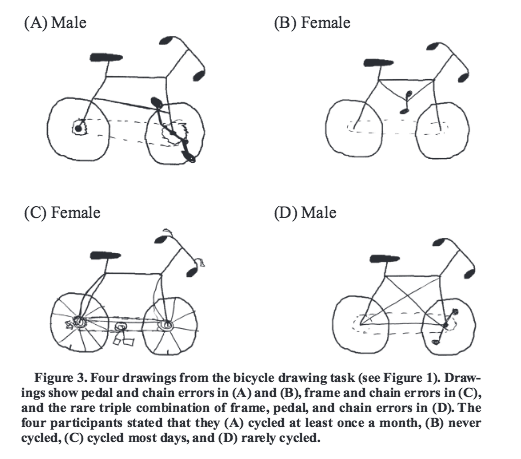

Without looking at a picture of one, could you successfully draw a picture of a bicycle? I’m not looking for artistic achievement, just a simple drawing that has the pedals, handles, frames and gears in the right places.

In one of the most interesting studies I’ve encountered in cognitive science so far, researchers asked participants to do exactly this. To everyone’s surprise, most people can’t do this! Typical drawings have numerous errors, many of which would render a bike completely non-functional, such as connecting both wheels to the gears or not connecting the gears with the pedals.

It’s easy to look at these pictures and laugh, but the reason this happens is quite interesting. Bicycles are common. Most people know how to ride one. This results, upon introspection, in a fairly easy processing of bike-related ideas. This ease-of-processing is, incorrectly, translated as evidence of understanding how a bike works mechanically.

Another great example of this is a can-opener.

Could you describe how one works?

Writer and former AI researcher David Chapman did this test himself and fell for the same trick:

“I did this after reading the Rozenblit paper, and was surprised to find that my explanation had some details wrong, and significant missing parts. I also discovered, after playing with two ordinary manual crank-turning can openers, that they worked on completely different principles. I’ve used both types a million times, and never noticed this, because you use them exactly the same way. My rating of my original understanding went from 6 to 3. I’m estimating my new understanding at 6, but I’m worried I’m still overconfident!” [link added]

Can openers are a great example because we use them a lot, they appear simple and the mechanism itself isn’t hidden. This all contributes to a heightened illusion that we understand them better than we, in fact, do.

Improving Your Metaknowledge

What can you do to improve your metaknowledge?

First, don’t trust yourself that you understand something.

Test it.

Improving Metacognition and Metaknowledge

Metaknowledge is part of a broader concept known as metacognition, which can be loosely translated to mean thinking about thinking. This includes not only metaknowledge, but also how the learner thinks about choosing strategies for effective learning, self-regulation and error correction. The whole enterprise of learning how to learn better can be said to be an effort to improve metacognition or metacognitive strategies.

Some research finds that metacognition has an independent explanatory effect on learning performance beyond IQ. This implies that metacognitive abilities might be more than just being smart, and instead represent a unique ability we use to improve learning.

Metacognition can be broken down into two particular abilities: monitoring and control. Monitoring, which includes metaknowledge, is the self-awareness of what you know and how well you’re learning. Control takes the insights of monitored cognition and applies them to change your behavior.

What is Declarative Metaknowledge?

Declarative metaknowledge is the easiest to understand of the three components. It simply represents how well you know what it is you know. Poor declarative metaknowledge means you have difficulty determining what you know and what you don’t.


Many metacognitive improvements are relatively straightforward. If you feel like you’re not paying attention well (metacognitive monitoring) you may then decide to take notes or tune out distractions (metacognitive control).

Other metacognitive improvements come from having a broader knowledge of the different ways one can approach a learning problem and a sense of their differing effectiveness.

Metacognitive Control

There are different types of control that take place in learning: planning before the learning takes place, monitoring and adjustment during the learning task and, finally, evaluation and error-correction after one has learned.

A clear example of differing effectiveness is the research by Rabinowitz, Ackerman, Craik and Hinchley (1982), which showed that students who used visual imagery to remember words remembered far more than those who used only repetition. However, their individual judgements of learning were the same in both cases.

My efforts to uncover and promote learning methods essentially fall into this category of metacognitive improvements. It’s my belief that knowing more approaches to learning as well as why and how they work gives the learner a way to handle more and more problems effectively.

Tips for Improving Metaknowledge

Practice testing is one of only two studying methods investigated by this comprehensive meta-analysis to have strong empirical support for its use. (The other was distributed practice, the idea of spacing your studying sessions over many smaller intervals instead of cramming in one big chunk.)

Walking through an explanation can be a good way to immediately find holes in your understanding.

Practice circumvents the need to rely on imperfect cues of metaknowledge. Instead of relying on ease of processing, familiarity or fluency to decide whether you understand something, you face down actual problems that test your knowledge.

Not every subject you want to understand will have practice problems, but practice, generally construed, is possible with almost any subject.

How to Practice Without Questions

When I mention the robust research supporting practice in learning, many interested learners bemoan that this might work for math or physics with ample problem sets, but what about history, art, psychology or any number of other subjects without well-defined problem sets and solutions to test oneself on?

My answer to this is that everything you are learning has some end-use situation. This doesn’t mean that all knowledge is ultimately practical, in some hands-on sense. Many times, what you want to learn is for the purpose of learning other things or participating in intellectual discussions. I’m curious about art history, not because it has real practical significance, but so that I can interpret what I see in an art museum or participate in discussions about those topics.

The answer of how to practice these subjects is embedded in their end-use case. As an example, if I want to assure myself I really understand some psychology, and my reason is that I want to be able to discuss it in another context, a good practice task would be to write an article about the topic in question. When the real-use case is too onerous, I might substitute it with a modified, related practice task.

I usually like to think of the real-world usage case as a starting point in the reflection of how to practice, not always an end-point. When learning, there can be two good reasons to deviate from this starting point: drills and accessibility. Drills allow you to focus on subproblems of the learning activity more directly. Accessibility allows you to practice more effectively when real-use practice is difficult to obtain.

Practice may not always be obvious, but figuring out how to practice inevitably involves asking deep questions about your motivations for learning.

Using Drills

A good learner first starts by thinking about what the real-use case is for knowledge. This is because if you copy the learning strategies of someone who has different aims, you may end up learning inefficiently. This might sound esoteric, but it’s really a common, practical problem.


Consider learning a language. There are many, many different reasons to learn a language. You might want to learn to travel easily. To work in a different country. To become a translator. To watch foreign movies or read foreign literature.

All of these goals have considerable overlap. But they also differ in emphases. When Vat and I were traveling in China to learn Mandarin, for instance, one of my goals was eventually being able to read literature. Vat had no interest in this. As a result, we opted for somewhat different starting strategies. I pursued an approach that involved both character learning and spoken practice, while his focused exclusively on the latter.

However, expert practice often doesn’t spend most of its time on real-use cases.

Elite musicians don’t just play a lot of music. Basketball players don’t just play games. They do drills.

The reason why is that drills allow them to focus their learning efforts on the particular sub-problems of learning which are creating the most difficulty. These are then integrated backwards into the whole learning pursuit. This is a difficult activity that requires considerable self-awareness and often benefits from guided coaching.

Deliberate Practice and Drills

In the literature on mastery, Anders Ericsson makes it clear that a big difference between elite performers and those of middling achievements is the amount of time spent on deliberate practice. This type of practice is directly put in contrast with “real use” situations. Given this prominent contrast, why am I nonetheless advocating real use as a reasonable way to learn things well?

In my mind, real usage is a starting point, not a destination. What Ericsson notes is that real usage (say playing games for an athlete or complete songs for a musician) isn’t sufficiently focused. Imagine that you’re trying to become an elite violinist. Any given song may only have a few key tricky parts, with the rest being within the threshold of your current ability. Playing a piece that takes several minutes may only really test your ability a small percentage of the time. By doing drills, you increase that to nearly one hundred percent.

Additionally, focusing on drills allows one to more closely monitor and correct one’s performance. In a basketball game, your cognitive resources are split between many different factors requiring your attention. Doing a layup drill, however, you can focus all of your mental resources exclusively on the mechanics of that one action.

Note, however, that all of these activities support the end use, namely, playing actual games or pieces. The performer (or coach) will decide what subproblems of the learning task require attention and focus learning on those areas.

Modifying Learning for Accessibility

Modifying practice for accessibility is a common practice because the real-use case might not be easy to access or participate in. For instance, your end goal for knowledge might be to use the knowledge in a writing situation. But writing an essay for every concept you learn would take way too much time. As a result, you substitute the real-use case with a different practice activity that might be flawed, but benefits from being easier to apply. In this case, you might opt for pausing after each chapter to speak a brief summary of the ideas to yourself. These modified activities are often weaker than the full practice. This means that they might miss important elements of the original practice activity. However, because they are easier, they might occupy more practice time than the real-use situation.


For an example of how this might play out, imagine you’re trying to learn AI and deep learning. There are so many concepts that you decide to triage your efforts. You know that implementing a real-world AI application is the end-use. But you decide that easier, substitute activities are: doing a mock problem, explaining the concept to yourself and just reading the material. You then decide to split your time so that on a few of the most useful ideas you do a real implementation, on more of the ideas you do some kind of toy problem, on many of the smaller details you just do a self-explanation and, finally, for the bulk of the details you satisfy yourself with just having read the material.
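One way to picture this kind of triage is as a simple assignment of ranked ideas to practice tiers. The sketch below is only illustrative: the tier percentages, the `TIERS` table and the `triage` function are all hypothetical choices, not a recommendation for how to divide your time.

```python
# Hypothetical tiers, from most to least demanding, with the percentage of
# ideas assigned to each. The percentages are made up for illustration.
TIERS = [
    ("real implementation", 5),
    ("toy problem", 15),
    ("self-explanation", 30),
    ("read only", 50),
]


def triage(ideas):
    """Map each idea (ordered from most to least useful) to a practice tier."""
    plan = {}
    total = len(ideas)
    start = 0
    cumulative = 0
    for index, (tier, percent) in enumerate(TIERS):
        cumulative += percent
        # The last tier takes whatever remains so no idea is left out.
        end = total if index == len(TIERS) - 1 else cumulative * total // 100
        for idea in ideas[start:end]:
            plan[idea] = tier
        start = end
    return plan


ideas = ["idea %d" % n for n in range(1, 21)]
for idea, tier in triage(ideas).items():
    print(idea, "->", tier)
```

With twenty ideas, this puts one in the most demanding tier and ten in the lightest, mirroring the "few, more, many, bulk" split described above.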

Ultimately, don’t be convinced that, just because you feel like you understand something, you really know the full story.

The Feynman Technique

One of my favorite methods for this is something I call the Feynman Technique.

This process was inspired by reading Richard Feynman’s autobiography, where he detailed a problem he had understanding a difficult physics paper. His sister told him to go back and read through the paper as if he were a student at school:

“No, what you mean is not that you can’t understand it, but that you didn’t invent it. You didn’t figure it out on your own way, from hearing the clue. What you should do is imagine you’re a student again, and take this paper upstairs, read every line of it, and check the equations. Then you’ll understand it very easily.” [emphasis in original]

While I’m uncertain on the exact details of how Feynman did this, my own process has a similar outcome. Instead of throwing your hands up to say you don’t understand something, go through it systematically to find out what you don’t understand.

This technique, without further specifications, can still create problems of explanatory depth, but these can be remedied by being careful in specifying the context and scope.

How to Prevent Explanatory Depth Issues with the Feynman Technique

One problem I often encountered during the MIT Challenge was that I’d encounter a concept I poorly understood in a lecture, run through the Feynman Technique on it, feel better, but… still not be able to solve problems on it.

The issue here is at the heart of this article. I was able to fill in some details of my explanation, but not to the level that was needed to solve the problem. As a result, I had a false picture of what I knew.

Luckily, this can be corrected quite easily just by being more careful in constraining the scope. Once again, it comes down to purpose: why are you trying to use this technique? For me, there are a few good reasons:

1. I want to have a better gist of the concept. This can happen when I feel like I don’t have a good intuitive understanding of what an idea is trying to say, what it represents or how it applies. When I was first learning about voltage, for instance, I didn’t have a good mental picture for what it was. Doing a Feynman allowed me to form an analogy with height when thinking about gravitational forces.

2. I want to solve a particular type of problem. You might have a particular problem type you want to solve. In this case, you should try to walk through, step by step, what to do when solving the problem.

3. I don’t understand a particular detail. In this case, focus on understanding that detail. Don’t expect that doing a high-level intuitive visualization will resolve an issue that hinges around a particular detail. Conversely, resolving a detail may not give you a high-level understanding.

Metaknowledge ultimately revolves around having these pings of self-awareness. When you encounter difficulties, retreating to broad questions like, “What am I trying to accomplish?” or, “What is standing in my way?” is often the key that leads you to concrete methods that give answers.

Knowledge Comes First From Self-Doubt


Often simple ideas and explanations have complex underpinnings. This is where the problem of explanatory depth and failures of metaknowledge are most apparent. Because the summary version is simple, it leads one to believe that the deeper understanding is also simple, when reality might actually be more complicated.

As a good example of this, consider the difference between physics and psychology. Many people informally weigh in on the latter all the time (myself included), but would be reluctant to speak authoritatively about the former. The reason is that psychological ideas, at a zoomed-out view, look more familiar and obvious than those in physics. As a result, we’re inclined to believe that we really understand what’s going on all the way down. In practice, it’s often these fields that are even more complicated.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.
